AI Research Agents: Complete Implementation Guide 2026

Transforming Information Gathering Through Intelligent Automation

Executive Summary

AI research agents represent a paradigm shift in how organizations gather, analyze, and synthesize information. These autonomous systems combine advanced natural language processing, web search capabilities, reasoning engines, and knowledge synthesis to conduct research that previously required hours or days of human effort. This comprehensive guide explores the architecture, implementation, and strategic deployment of AI research agents across industries, providing actionable frameworks for organizations seeking to leverage this transformative technology.

Research agents operate fundamentally differently from traditional search tools or human researchers working alone. Rather than simply retrieving documents based on keyword matching, they understand context and intent, formulate sophisticated sub-questions that decompose complex inquiries, evaluate source credibility through multiple dimensions of trustworthiness, synthesize findings across multiple sources while identifying patterns and contradictions, and present coherent narratives that directly address the original research question. This intelligence allows them to handle complex research tasks that require judgment, iteration, and domain understanding.

The business impact of research agents extends across every knowledge-intensive function. Investment analysts expand coverage from dozens to hundreds of companies without adding headcount. Competitive intelligence teams track real-time market dynamics that previously went unnoticed. Compliance organizations achieve comprehensive regulatory monitoring with reduced risk. R&D teams accelerate innovation cycles through rapid landscape analysis. Strategic planning improves as organizations base decisions on more comprehensive, current information.

Yet implementing research agents successfully requires more than deploying sophisticated technology. Organizations must redesign research workflows around human-AI collaboration, establish quality assurance frameworks that ensure reliable outputs, invest in change management that helps users adopt new ways of working, and create governance structures that manage risks while enabling innovation. This guide provides the frameworks, case studies, and practical guidance needed to navigate that journey from initial assessment through scaled production deployment.

Introduction: The Evolution of Research

The landscape of information gathering has undergone a dramatic transformation over the past three decades. Each wave of innovation has fundamentally changed how we access and process knowledge. The shift from physical card catalogs to digital databases in the 1990s made information more accessible but required learning complex Boolean search syntax. The rise of web search engines in the early 2000s democratized information access through natural language queries, though users still needed to manually sift through thousands of results. The emergence of knowledge graphs and semantic search in the 2010s improved relevance but still placed the burden of synthesis and analysis entirely on human researchers.

AI research agents represent the latest and most transformative leap in this evolution. They do more than help us find information faster: they change the nature of research itself by automating analysis, synthesis, and insight generation alongside retrieval. This shift is as significant as the move from manual card catalogs to search engines, but the implications run much deeper into how organizations create and leverage knowledge.

The Research Challenge

Modern research faces unprecedented complexity driven by multiple converging forces. The volume of published information continues its exponential growth, with global data creation doubling approximately every 12 months. Academic research alone produces over 3 million papers annually across tens of thousands of journals. Add to this news articles, social media discussions, corporate announcements, regulatory filings, patent publications, and countless other information sources, and the challenge becomes clear: the information available vastly exceeds any human’s capacity to process.

This information explosion creates several critical problems for researchers. First, coverage gaps emerge as researchers simply cannot monitor all relevant sources. Important developments go unnoticed, competitive threats emerge without warning, and opportunities vanish before organizations even know they existed. Second, recency challenges arise as the time required for comprehensive research means that by the time analysis is complete, the information landscape has already shifted. Third, depth-breadth tradeoffs force researchers to choose between thorough analysis of narrow topics or superficial treatment of broader questions—neither option serves strategic decision-making well.

Consider a typical market research project examining competitive dynamics in a rapidly evolving technology sector. The researcher must synthesize insights from hundreds of sources, including competitor websites, product documentation, pricing pages, social media discussions, news coverage, analyst reports, patent filings, job postings, customer reviews, forum discussions, conference proceedings, and financial disclosures. Each source requires evaluation for credibility, extraction of relevant information, contextualization within broader industry trends, and integration with findings from other sources.

The cognitive load of this work is immense. Researchers must track which sources they have consulted and which remain unexamined. They must identify contradictions between sources and determine which information to trust. They must recognize patterns across disparate sources that might not be obvious when examining any single source. They must maintain objectivity while under pressure to confirm hypotheses or deliver results quickly. They must remember context from sources examined days or weeks earlier when synthesizing findings into final reports.

Traditional research methodologies struggle with these demands in different ways. Manual research by human experts remains the gold standard for quality but simply cannot scale to handle the volume and velocity of modern information needs. A skilled analyst might thoroughly research 20-30 companies over the course of a year, but comprehensive market coverage might require monitoring 200-300 organizations. Automation through keyword alerts and RSS feeds can increase monitoring breadth but generates massive volumes of unfiltered information that still requires human review. Existing analytical tools can aggregate data but lack the contextual understanding needed to separate signal from noise, identify implications, or generate genuine insights.

The AI Agent Solution

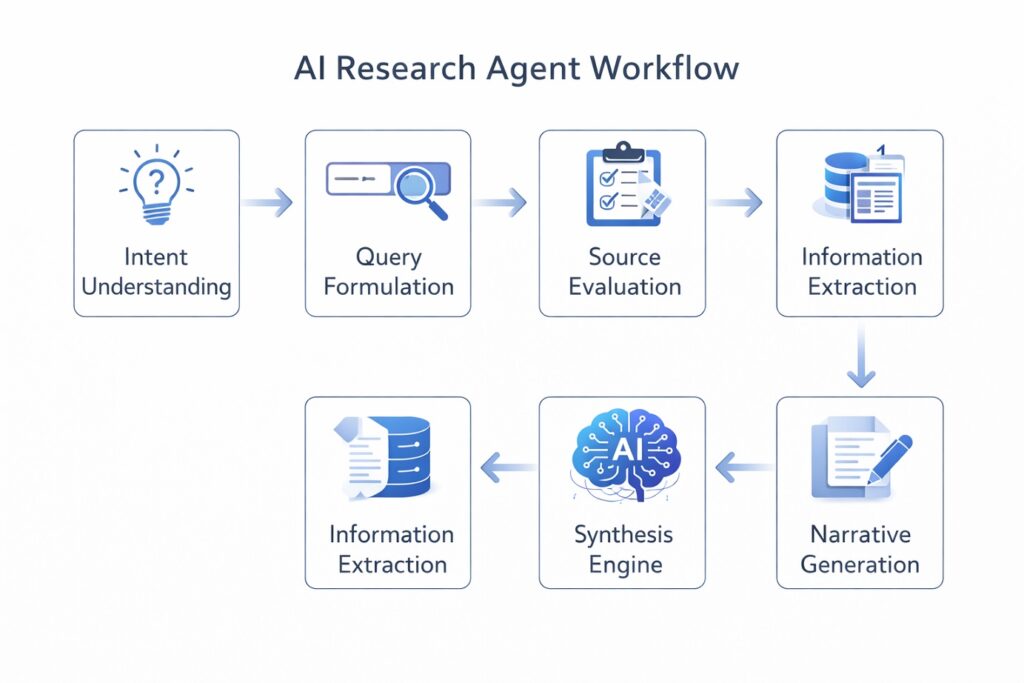

AI research agents address these limitations through a combination of capabilities that mirror and enhance human research processes while operating at machine scale and speed. Understanding how agents work requires examining several key components that work together to deliver intelligent research.

Intent understanding forms the foundation. When given a research question, agents don’t simply match keywords. They parse the query to identify the core information needed, implicit assumptions, required depth of analysis, and intended use of the research. A question like ‘What are the competitive dynamics in cloud infrastructure?’ prompts the agent to recognize that the requester likely needs information about major providers, market share trends, differentiation strategies, pricing models, technology capabilities, and customer segment focus, even though none of these specifics were mentioned. This understanding of intent guides every subsequent research step.

Dynamic query formulation represents the next capability layer. Rather than executing a single search, agents generate multiple search strategies designed to surface different types of relevant information. They might search for direct information about competitors, customer discussions comparing alternatives, analyst reports on market trends, news coverage of strategic moves, and technical documentation revealing capability differences. As initial results come in, agents refine their queries based on what they learn. If early searches reveal a particular competitor gaining market share, subsequent queries might focus specifically on that organization to understand its success factors.
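The seed-then-refine loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `ANGLES` templates, the `QueryPlan` class, and the `min_mentions` threshold are all hypothetical names and values chosen for the example, and the step that actually runs searches and counts entity mentions is assumed to happen elsewhere.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical search angles, loosely based on the examples in the text.
ANGLES = {
    "direct": "{topic} competitors overview",
    "customer": "{topic} customer comparison of alternatives",
    "analyst": "{topic} analyst report market trends",
    "news": "{topic} strategic moves news",
    "technical": "{topic} technical documentation capabilities",
}


@dataclass
class QueryPlan:
    topic: str
    queries: list[str] = field(default_factory=list)

    def seed(self) -> None:
        """Create one initial query per search angle."""
        self.queries = [t.format(topic=self.topic) for t in ANGLES.values()]

    def refine(self, entity_mentions: dict[str, int], min_mentions: int = 3) -> list[str]:
        """Add follow-up queries for entities that recur in early results.

        entity_mentions maps an entity name to how often it appeared in the
        results gathered so far (counting is assumed to be done by the caller).
        Returns only the queries that were newly added.
        """
        follow_ups = [
            f"{entity} {self.topic} success factors"
            for entity, count in sorted(entity_mentions.items())
            if count >= min_mentions
        ]
        new = [q for q in follow_ups if q not in self.queries]
        self.queries.extend(new)
        return new


plan = QueryPlan("cloud infrastructure")
plan.seed()
# "Acme Cloud" is a made-up competitor that keeps showing up in early results.
print(plan.refine({"Acme Cloud": 4, "Other Vendor": 1}))
```

Keeping the plan as explicit data makes each refinement step easy to log and audit, which matters when a reviewer later asks why the agent pursued a particular line of inquiry.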

Source evaluation provides critical quality control. Agents assess credibility through multiple dimensions rather than treating all information equally. Authority analysis examines author expertise, publication venue reputation, and institutional backing. Independence assessment identifies potential conflicts of interest or sponsored content. Temporal analysis evaluates information currency and identifies superseded content. Citation analysis examines whether claims trace to authoritative sources or represent unsubstantiated assertions. Cross-reference validation checks whether multiple independent sources corroborate findings. This multi-dimensional evaluation happens automatically and consistently, reducing human bias while maintaining high quality standards.
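One simple way to combine the five dimensions above is a weighted sum. The sketch below assumes each dimension has already been scored between 0 and 1 by some upstream step; the weights, the `SourceAssessment` type, and the `credibility` function are illustrative assumptions rather than values or names the guide prescribes.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed weights for the five dimensions named in the text; they sum to 1.0.
WEIGHTS = {
    "authority": 0.30,
    "independence": 0.20,
    "currency": 0.15,
    "citations": 0.15,
    "corroboration": 0.20,
}


@dataclass
class SourceAssessment:
    name: str
    scores: dict[str, float]  # dimension name -> score in [0, 1]


def credibility(assessment: SourceAssessment) -> float:
    """Return a weighted credibility score in [0, 1]."""
    missing = sorted(set(WEIGHTS) - set(assessment.scores))
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    for dim in WEIGHTS:
        if not 0.0 <= assessment.scores[dim] <= 1.0:
            raise ValueError(f"{dim} score must be between 0 and 1")
    return sum(weight * assessment.scores[dim] for dim, weight in WEIGHTS.items())


# Hypothetical assessment of a single source.
journal = SourceAssessment(
    "trade-journal",
    {"authority": 0.9, "independence": 0.5, "currency": 1.0,
     "citations": 0.8, "corroboration": 0.6},
)
print(round(credibility(journal), 2))
```

A single number is convenient for ranking, but keeping the per-dimension scores alongside it lets a human reviewer see why a source was trusted or discounted.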

Information extraction represents the technical core of agent capability. Modern language models can read and understand text with human-like comprehension, identifying key facts, relationships, and implications. Unlike keyword-based extraction that pulls out isolated phrases, agents understand context and can extract structured information even when expressed in varying formats across different sources. They recognize when different terms refer to the same concept, when statements contradict each other, and when information in one source provides context for understanding claims in another source.

The synthesis engine forms the analytical heart of research agents. Here, information from disparate sources gets integrated into coherent narratives that deliver genuine insight rather than mere information aggregation. Synthesis involves several sophisticated capabilities. Pattern recognition identifies recurring themes, trends, or relationships across multiple sources. Contradiction resolution addresses conflicting information by evaluating source quality, considering temporal factors, and identifying the context that explains apparent disagreements. Gap identification recognizes where evidence is thin or absent, flagging areas requiring additional research or acknowledging uncertainty. Causal analysis goes beyond correlation to identify likely cause-and-effect relationships based on temporal sequences, mechanism descriptions, and domain logic. Insight generation produces conclusions that transcend individual sources, connecting dots that might not be obvious when examining any single piece of information.
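Two of these capabilities, contradiction resolution and gap identification, lend themselves to a small sketch. The version below is deliberately simplified: it assumes claims have already been extracted and normalized into a hypothetical `Claim` record, resolves conflicts by preferring the most credible and then the most recent source, and flags topics backed by fewer than `min_sources` distinct sources. Real systems would also need the contextual reasoning the paragraph describes, which this example does not attempt.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Claim:
    topic: str          # what the claim is about
    value: str          # the asserted value, normalized to a string
    source: str         # identifier of the source making the claim
    credibility: float  # 0..1, e.g. from a source-evaluation step
    published: date


def synthesize(claims, min_sources=2):
    """Group claims by topic, pick a preferred value, and flag problems."""
    by_topic = defaultdict(list)
    for claim in claims:
        by_topic[claim.topic].append(claim)

    report = {}
    for topic, group in by_topic.items():
        # Prefer the most credible claim; break ties by recency.
        best = max(group, key=lambda c: (c.credibility, c.published))
        report[topic] = {
            "value": best.value,
            "supported_by": sorted(c.source for c in group if c.value == best.value),
            # More than one distinct value means the sources disagree.
            "contradicted": len({c.value for c in group}) > 1,
            # Too few distinct sources means the evidence is thin.
            "thin_evidence": len({c.source for c in group}) < min_sources,
        }
    return report


# Hypothetical claims for illustration only.
claims = [
    Claim("market_share", "30%", "analyst-report", 0.9, date(2025, 1, 10)),
    Claim("market_share", "25%", "blog-post", 0.4, date(2025, 3, 1)),
    Claim("pricing_model", "usage-based", "vendor-docs", 0.7, date(2025, 2, 1)),
]
for topic, finding in synthesize(claims).items():
    print(topic, finding)
```

Surfacing the `contradicted` and `thin_evidence` flags in the final report, rather than silently picking a winner, keeps the uncertainty visible to the reader.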

Narrative generation transforms structured findings into readable reports that serve human decision-making. Agents organize information logically, provide appropriate context, cite sources transparently, qualify claims with appropriate certainty levels, and highlight areas of contradiction or uncertainty. The narrative format makes research accessible to decision-makers who need to quickly understand findings and their implications without wading through raw source material.

Continuous learning represents the final critical capability. As agents conduct research and receive feedback on quality, relevance, and usefulness, they improve their performance over time. This learning happens at multiple levels, including which sources prove most reliable for different question types, which query strategies yield the best results, which analytical frameworks provide the most insight, and which presentation formats best serve different types of users. Organizations that systematically capture and apply these learnings build agents that become progressively more valuable as they accumulate experience.
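As one example of learning which sources prove most reliable for different question types, the sketch below keeps an exponential moving average of feedback ratings per source and question type. The `ReliabilityTracker` class, the smoothing factor `alpha`, and the neutral prior of 0.5 are assumptions made for illustration; the guide does not specify a particular update rule.

```python
from __future__ import annotations


class ReliabilityTracker:
    """Track source reliability per question type from user feedback."""

    def __init__(self, alpha: float = 0.2, prior: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha      # how strongly new feedback moves the score
        self.prior = prior      # score assumed for sources with no feedback
        self._scores: dict[tuple[str, str], float] = {}

    def record(self, source: str, question_type: str, rating: float) -> float:
        """Blend a new rating (0..1) into the running score and return it."""
        if not 0.0 <= rating <= 1.0:
            raise ValueError("rating must be between 0 and 1")
        key = (source, question_type)
        old = self._scores.get(key, self.prior)
        self._scores[key] = (1 - self.alpha) * old + self.alpha * rating
        return self._scores[key]

    def score(self, source: str, question_type: str) -> float:
        """Current score, or the prior if the source has no feedback yet."""
        return self._scores.get((source, question_type), self.prior)

    def ranked(self, question_type: str) -> list[tuple[str, float]]:
        """Sources with feedback for this question type, best first."""
        rows = [(src, s) for (src, qt), s in self._scores.items() if qt == question_type]
        return sorted(rows, key=lambda row: row[1], reverse=True)


tracker = ReliabilityTracker(alpha=0.5)
tracker.record("sec-filings", "financial", 1.0)  # useful result
tracker.record("forum", "financial", 0.0)        # unhelpful result
print(tracker.ranked("financial"))
```

An exponential average weights recent feedback more heavily, so a source that degrades over time loses rank without anyone having to reset its history.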

Who Benefits from Research Agents

Research agents deliver value across diverse organizational contexts, though implementation patterns and success factors vary by use case. Understanding who benefits and how they benefit helps organizations identify high-value applications within their specific context.

Investment professionals, including portfolio managers, equity analysts, and credit analysts, face constant pressure to expand coverage while maintaining analytical depth. Research agents enable analysts to monitor far more companies than traditional methods allow, providing continuous surveillance that catches material developments in real time rather than weeks later when quarterly results are reviewed. Agents track earnings calls, SEC filings, news coverage, management changes, customer reviews, and social sentiment, generating alerts when significant changes occur and periodic summaries synthesizing trends. This expanded coverage doesn’t replace deep human analysis but extends analyst reach, allowing analysts to maintain awareness of a broader investable universe while focusing intensive effort on their highest-conviction ideas.

Competitive intelligence teams traditionally struggle with the volume and velocity of competitive information. Competitors constantly update products, change pricing, announce partnerships, adjust positioning, and shift strategies—most of which happen without formal announcement. Research agents continuously monitor competitor digital footprints, including websites, documentation, social media, job postings, and news coverage, detecting changes as they occur. This real-time intelligence allows companies to respond quickly to competitive moves rather than discovering changes weeks later through customer conversations or quarterly business reviews. Teams shift from manually gathering information to strategically interpreting intelligence and developing competitive responses.

Compliance and legal professionals face growing regulatory complexity as governments worldwide increase oversight across industries. Regulations change frequently, with new rules, guidance documents, enforcement actions, and interpretations published constantly across federal, state, and international jurisdictions. Research agents monitor regulatory sources, assess relevance to organizational activities, identify compliance obligations, and flag items requiring legal review. This comprehensive monitoring provides assurance that important regulatory changes won’t be missed while freeing compliance staff to focus on risk assessment and control implementation rather than information gathering.

Strategy and corporate development teams need comprehensive market intelligence to inform decisions about market entry, acquisitions, partnerships, and strategic pivots. Traditional market research through specialized firms delivers high quality, but at high cost and on long timelines, which limits its use to major decisions. Research agents enable rapid exploratory research that informs whether to commission expensive primary research, provides baseline understanding before entering new domains, identifies potential partners or acquisition targets, and monitors market dynamics to detect strategic inflection points. This faster, more affordable research doesn’t replace deep strategic analysis but expands the aperture of strategic consideration.

Product and R&D teams benefit from continuous technology and market monitoring that identifies emerging capabilities, shifting customer needs, and competitive innovations. Agents track technical publications, patent filings, open source projects, customer discussions, and product releases, synthesizing findings into intelligence that informs product roadmaps. Teams learn about new technical approaches, discover unmet customer needs, and understand competitive feature velocity without dedicating researcher headcount to continuous monitoring. This intelligence helps organizations stay ahead of market evolution rather than discovering important trends after competitors have already moved.

Sales and marketing organizations leverage research agents for prospect research, account intelligence, and content development. Agents can rapidly research prospects before sales calls, providing background on company strategy, challenges, decision-makers, and technology stack. Marketing teams use agents to research content topics, identify emerging trends worth addressing, and understand audience interests. This research enables more personalized outreach, more relevant content, and a better understanding of customer contexts.

20+ Research Prompts: Patterns and Strategies

Effective prompt engineering separates exceptional research agents from mediocre ones. Well-crafted prompts elicit focused investigation, comprehensive analysis, and actionable insights, while poor prompts yield generic, superficial, or tangential research that wastes computational resources without delivering value. The following collection represents battle-tested patterns across diverse research scenarios. Each prompt template embodies specific structural principles that maximize agent performance.

Market Intelligence Prompts

Market intelligence research requires understanding competitive dynamics, customer behavior, and industry evolution. Effective market intelligence prompts specify analytical frameworks, priority information, and strategic context while remaining open to unexpected findings that might reshape strategic understanding.

1. Competitive Landscape Analysis

Prompt: “Conduct a comprehensive competitive analysis of [INDUSTRY] focusing on the top 5-7 players. For each competitor, identify their market positioning, recent strategic moves in the past 12 months, technology stack and capabilities, pricing model and strategy, target customer segments, and unique value propositions. Analyze market share trends over the past 3 years, identifying winners and losers with specific data points. Assess competitive dynamics including barriers to entry, threat of substitutes, supplier power, and buyer power. Evaluate likely future consolidation scenarios. Synthesize findings into strategic implications for a new market entrant, including recommended positioning, go-to-market strategy, and areas to avoid.”

Why it works: This prompt combines structural analysis (Porter’s Five Forces framework) with specific deliverables while maintaining outcome focus. The temporal dimension ensures current relevance, and the synthesis requirement forces integration rather than simple list-making. By specifying the ultimate use case (new market entrant), it ensures research addresses actual strategic needs.
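The bracketed placeholders in these templates, such as [INDUSTRY], can be filled programmatically before a prompt is sent to an agent. The helper below is a minimal sketch: the `placeholders` and `fill` functions and the regular expression are assumptions made for this example, and the pattern only recognizes upper-case names like those used in this guide.

```python
from __future__ import annotations

import re

# Matches bracketed upper-case names such as [INDUSTRY] or [TECHNOLOGY/MARKET].
PLACEHOLDER = re.compile(r"\[([A-Z][A-Z0-9 /]*)\]")


def placeholders(template: str) -> list[str]:
    """Return placeholder names in order of first appearance."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def fill(template: str, values: dict[str, str]) -> str:
    """Substitute every placeholder, refusing to send a half-filled prompt."""
    missing = [name for name in placeholders(template) if name not in values]
    if missing:
        raise KeyError(f"unfilled placeholders: {missing}")
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


template = (
    "Conduct a comprehensive competitive analysis of [INDUSTRY] "
    "focusing on the top 5-7 players."
)
print(placeholders(template))
print(fill(template, {"INDUSTRY": "cloud infrastructure"}))
```

Failing loudly on an unfilled placeholder is a deliberate choice here: a prompt that still contains a literal bracketed name tends to produce generic research.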

2. Emerging Trend Identification

Prompt: “Identify emerging trends in [TECHNOLOGY/MARKET] that are currently in the early adoption phase but show potential for mainstream impact within 2-3 years. For each trend, document early indicators including patent activity, pilot programs, venture capital funding patterns, and thought leader discussions. Assess technology maturity using the Gartner Hype Cycle or similar framework. Identify key technical enablers and remaining blockers to adoption. Profile early adopters including their characteristics, use cases, and reported outcomes. Estimate total addressable market at maturity. Analyze which trends exhibit network effects or platform characteristics. Prioritize trends by likely impact on [SPECIFIC BUSINESS CONTEXT] considering both upside opportunity and disruption risk.”

Why it works: The prompt establishes clear criteria for trend qualification while requiring evidence-based assessment across multiple dimensions. The prioritization requirement ensures comparative analysis that guides resource allocation. Specificity about assessment frameworks gives agents concrete evaluation methods.

3. Customer Research Deep Dive

Prompt: “Research [TARGET CUSTOMER SEGMENT] to understand their current challenges, unmet needs, and decision-making processes around [PRODUCT CATEGORY]. Analyze their typical workflow, documenting pain points at each stage with specific examples. Examine budget allocation patterns and spending priorities. Identify the evaluation criteria they use when selecting solutions, ranked by importance. Assess switching costs, including technical, financial, and organizational factors. Map the language and terminology they use when discussing this problem space. Identify trusted information sources they consult during research. Analyze peer influence dynamics and reference customer importance. Map the buying committee structure, including typical roles, their individual concerns, and influence on purchase decisions. Synthesize findings into detailed buyer personas using jobs-to-be-done frameworks that explain the functional, emotional, and social dimensions of their needs.”

Why it works: This prompt drives deep customer understanding by examining multiple dimensions of customer behavior and psychology. The jobs-to-be-done framework prevents surface-level demographic descriptions in favor of need-based segmentation. Specific attention to language and terminology helps organizations communicate effectively with prospects.

Technical Research Prompts

4. Technology Stack Evaluation

Prompt: “Evaluate [TECHNOLOGY/FRAMEWORK] for use in [SPECIFIC APPLICATION CONTEXT] at [SCALE]. Assess technical capabilities against our requirements including performance characteristics, scalability limits with specific benchmarks, security features and known vulnerabilities, integration complexity with our existing systems, learning curve for our team, quality and responsiveness of community support, and total cost of ownership including licensing, infrastructure, and maintenance. Compare against [ALTERNATIVE SOLUTION 1] and [ALTERNATIVE SOLUTION 2] using a decision matrix with weighted criteria. Identify companies successfully using this technology at similar or greater scale, documenting their implementation patterns, architectural decisions, and lessons learned from production experience. Highlight any gotchas or surprises they encountered. Provide a go/no-go recommendation with confidence level, risk assessment including technical and organizational risks, and migration considerations if switching from current solution.”

Why it works: The prompt demands practical, implementation-focused research rather than abstract capability descriptions. Requiring real-world case studies at a relevant scale provides crucial validation. The comparison framework ensures systematic evaluation rather than piecemeal assessment.
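The decision matrix with weighted criteria that this prompt asks for reduces to a weighted average per option. The sketch below shows the arithmetic under stated assumptions: the `rank_options` function, the criteria, the weights, and the 1-5 ratings are all hypothetical and would come from the team's own requirements and the agent's findings.

```python
def rank_options(weights, ratings):
    """Rank options by weighted average rating, highest first.

    weights: criterion -> relative importance (any positive scale)
    ratings: option -> {criterion -> rating}, all on the same scale
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")

    results = []
    for option, scores in ratings.items():
        missing = sorted(set(weights) - set(scores))
        if missing:
            raise ValueError(f"{option} is missing ratings for {missing}")
        weighted = sum(weights[c] * scores[c] for c in weights) / total_weight
        results.append((option, round(weighted, 3)))
    return sorted(results, key=lambda row: row[1], reverse=True)


# Hypothetical criteria weights and 1-5 ratings for three candidate options.
weights = {"performance": 3, "security": 2, "cost": 1}
ratings = {
    "candidate": {"performance": 4, "security": 3, "cost": 5},
    "alternative_1": {"performance": 5, "security": 2, "cost": 3},
    "alternative_2": {"performance": 3, "security": 4, "cost": 4},
}
for option, score in rank_options(weights, ratings):
    print(f"{option}: {score}")
```

Asking the agent to report the ratings and weights separately makes it easy to rerun the ranking when stakeholders disagree about how much each criterion should count.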

5. Architecture Pattern Research

Prompt: “Research architectural patterns for [SYSTEM TYPE] that handle [SCALE/COMPLEXITY REQUIREMENT]. Focus on proven approaches from high-traffic production systems processing similar workloads. For each pattern, document key architectural components and their responsibilities, data flow and communication patterns, scaling characteristics including bottlenecks and scaling limits, failure modes and recovery mechanisms, operational complexity and required tooling, and cost implications at different scales. Include real-world case studies with specific performance metrics, traffic volumes, team sizes, and operational practices. Identify anti-patterns and common implementation mistakes that lead to production issues. Document the tradeoffs between different patterns. Provide a decision framework for pattern selection based on specific requirements including traffic patterns, consistency needs, operational maturity, and budget constraints.”

Why it works: This prompt focuses on battle-tested approaches rather than theoretical possibilities. Emphasis on failure modes and anti-patterns provides crucial practical knowledge. The decision framework ensures research translates into actionable guidance.

6. Security Vulnerability Assessment

Prompt: “Research known vulnerabilities, attack vectors, and security best practices for [TECHNOLOGY/SYSTEM]. Compile recent CVEs with CVSS scores, documented exploits in the wild, and security advisories from the past 24 months. Analyze common misconfiguration issues that create vulnerabilities, focusing on default settings that should be changed. Assess supply chain risks, including dependencies and their security track record. Document authentication and authorization weaknesses, including common implementation mistakes. Review security audit findings from organizations running similar systems. Identify required security controls and compensating controls for identified risks. Document monitoring and detection approaches for potential attacks. Provide incident response procedures for likely scenarios. Prioritize recommendations by risk severity and implementation complexity, creating a phased security hardening roadmap.”

Why it works: The prompt balances theoretical vulnerabilities with practical implementation guidance. Temporal boundaries ensure current relevance in the rapidly evolving security landscape. Prioritization enables phased implementation based on available resources.

Academic and Scientific Research Prompts

7. Literature Review Synthesis

Prompt: “Conduct a systematic literature review on [RESEARCH TOPIC] covering peer-reviewed publications from the past 5 years. Identify key theoretical frameworks researchers are using to understand this phenomenon. Document methodological approaches including research designs, data collection methods, sample characteristics, and analytical techniques. Synthesize major findings and conclusions, noting areas of consensus versus active debate. Track how understanding has evolved over this period, identifying shifts in theoretical perspectives or methodological innovations. Map the research community including influential authors with their h-index and citation counts, key institutions and research groups, and citation networks showing how ideas flow. Identify gaps in current literature including under-researched populations, methodological limitations, and unexplored research questions. Propose promising directions for future research based on identified gaps and emerging trends. Synthesize findings into a structured literature review organized thematically rather than chronologically.”

Why it works: This prompt drives a systematic rather than haphazard literature review. Attention to methodological details enables critical evaluation. The thematic organization requirement ensures synthesis rather than mere summarization.

8. Cross-Disciplinary Insight Mining

Prompt: “Research how concepts from [DISCIPLINE A] have been applied to problems in [DISCIPLINE B]. Identify successful cross-pollination examples, documenting the original concept, the adaptation made, and outcomes achieved. Analyze underlying principles that transferred effectively versus those that required significant modification. Assess which concepts from [DISCIPLINE A] remain unexplored in [DISCIPLINE B] but show promise based on problem structure similarities. Document specific research papers, patents, or commercial implementations demonstrating cross-disciplinary innovation. Identify barriers that have prevented broader cross-pollination including terminology differences, institutional silos, or methodological incompatibilities. Map potential bridges between disciplines including shared conferences, cross-departmental research centers, or interdisciplinary journals. Propose 3-5 novel applications of [DISCIPLINE A] concepts to [DISCIPLINE B] problems, explaining the theoretical basis for why transfer should work.”

Why it works: This prompt encourages genuine innovation through disciplinary boundary crossing. By requiring analysis of why transfers succeed or fail, it builds transferable knowledge. The proposal requirement pushes beyond observation to creative synthesis.

Legal and Regulatory Research Prompts

9. Regulatory Landscape Analysis

Prompt: “Map the regulatory landscape for [INDUSTRY/ACTIVITY] across [JURISDICTIONS]. Identify all applicable regulations including primary legislation, implementing regulations, and guidance documents. Document licensing requirements, compliance obligations with timelines, reporting requirements, and record-keeping mandates. Analyze enforcement patterns over the past 3 years including common violations, penalty ranges, and factors influencing enforcement decisions. Track recent regulatory changes identifying the direction of travel toward more or less restrictive regimes. Document pending legislation and rulemaking that could impact operations, assessing the likelihood of passage and the implementation timeline. Analyze regulatory trends including areas of increasing regulatory focus, coordination between regulators, and political factors driving policy. Estimate compliance costs for different operational scenarios including initial compliance investment and ongoing costs. Assess regulatory risk based on enforcement history and operational profile. Provide a compliance roadmap with prioritized actions, resource requirements, and timeline.”

Why it works: This prompt drives comprehensive regulatory understanding beyond simple rule identification. Attention to enforcement patterns provides crucial practical insight. The roadmap requirement ensures actionable output.

10. Case Law Analysis

Prompt: “Research case law related to [LEGAL ISSUE] with focus on [JURISDICTION]. Identify landmark cases that established key precedents, documenting holdings, reasoning, and subsequent impact. Analyze how judicial interpretation has evolved over time. Track current precedent across different jurisdictions, noting circuit splits or regional variations in interpretation. Examine how courts have ruled on key legal questions including [SPECIFIC LEGAL QUESTIONS], documenting reasoning and outcomes. Identify factors that influenced judicial decisions including fact patterns, procedural postures, judge composition, and amicus briefs. Assess litigation risk for [SPECIFIC BUSINESS ACTIVITY] based on case law patterns and fact comparison. Identify unsettled legal questions where case law provides limited guidance. Document both favorable and unfavorable cases, analyzing distinguishing factors. Provide litigation strategy recommendations including arguments likely to succeed, risks to manage, and jurisdiction considerations.”

Why it works: The prompt demands nuanced legal analysis rather than simple case citations. Attention to reasoning helps predict how courts might rule on novel situations. Risk assessment connects research to business decisions.

Strategic Planning and Due Diligence Prompts

11. M&A Target Assessment

Prompt: “Conduct preliminary due diligence on [TARGET COMPANY] for potential acquisition. Research the company background including founding history, ownership structure, funding history, and previous transactions. Document product portfolio with revenue contribution by product, customer base with concentration analysis, and competitive position in served markets. Analyze financial performance trends over 3-5 years including revenue growth, profitability, margins, and cash flow. Assess technology assets including proprietary technology, intellectual property portfolio, technical debt indicators, and development capabilities. Evaluate key personnel including leadership team backgrounds, technical talent depth, and retention risks. Analyze cultural characteristics including values, work practices, and cultural fit with the acquirer. Identify potential risks including customer concentration, regulatory exposure, technology obsolescence, key person dependencies, pending litigation, and competitive threats. Review recent news and social media for reputation issues or red flags. Synthesize findings into an investment thesis explaining strategic rationale, value creation opportunities, and integration challenges. Provide a prioritized list of key diligence items requiring deep investigation.”

Why it works: This prompt structures preliminary diligence comprehensively while remaining focused on investment decision needs. Risk identification ensures balanced assessment. The prioritized diligence list enables efficient next steps.

12. Market Entry Strategy

Prompt: “Research market entry strategies for [PRODUCT/SERVICE] into [TARGET MARKET]. Analyze market size with TAM/SAM/SOM breakdown, growth rate and drivers, and competitive intensity using Porter’s Five Forces. Evaluate entry mode options including direct sales, channel partnerships, strategic alliances, acquisition, and licensing, comparing the advantages and disadvantages of each. Assess regulatory requirements including licenses, approvals, and compliance obligations. Identify cultural considerations affecting product-market fit, business practices, and customer expectations. Document localization needs including language, features, and go-to-market adaptation. Research successful and failed market entries by comparable companies in the past 5 years, documenting strategies used, execution challenges, and lessons learned. Map potential partners with capabilities assessment, distribution channels with coverage and economics, and pilot opportunities for market testing. Analyze competitive responses to new entrants and defensive strategies we should anticipate. Provide recommended entry strategy with detailed rationale, timeline with key milestones, resource requirements including investment and team, and success metrics for evaluation.”

Why it works: This prompt drives strategic thinking beyond simple opportunity assessment. Comparison of entry modes with tradeoffs enables informed decisions. Learning from precedents reduces risk of repeating others’ mistakes.

Innovation and Product Development Prompts

13. User Need Discovery

Prompt: “Research unmet needs and friction points for [USER SEGMENT] in [ACTIVITY/DOMAIN]. Analyze user forums, support tickets, social media discussions, product reviews, and Q&A sites to identify recurring complaints and pain points. Map the user journey from initial awareness through ongoing usage, highlighting friction at each stage with specific examples and frequency estimates. Identify jobs-to-be-done that current solutions address poorly or not at all. Document workaround behaviors users have developed to compensate for solution shortcomings. Analyze willingness to pay signals including discussions of pricing, comparisons of alternatives, and expressed frustration levels. Assess competition from adjacent categories or alternative approaches users consider. Identify segments with particularly acute pain who might be early adopters. Synthesize findings into opportunity areas ranked by user pain severity measured by frequency and intensity, market size estimated through user population, and competitive intensity. For top opportunities, propose potential solution approaches with validation strategies.”

Why it works: This prompt mines authentic user voice rather than relying on surveys that suffer from response bias. Jobs-to-be-done framing reveals underlying needs versus stated wants. The prioritization framework enables resource allocation.

14. Feature Prioritization Research

Prompt: “Research feature requests and usage patterns for [PRODUCT CATEGORY] to inform roadmap prioritization. Analyze competitor feature sets creating a competitive feature matrix, identifying table stakes features required for consideration, differentiating features that drive selection, and emerging features appearing in newest products. Mine user feedback sources including feature request forums, support tickets, sales win/loss analysis, and user interviews for feature requests. For each requested feature, document frequency of requests, user segments requesting it, stated use cases and benefits, and urgency indicators. Research feature usage patterns in existing products through published analytics, case studies, or leaked metrics. Assess which features drive user adoption, engagement, retention, and expansion based on product-led growth companies. Evaluate technical implementation complexity and estimated time-to-market for requested features. Analyze monetization potential including willingness to pay and tier placement. Provide feature prioritization framework balancing user value, competitive positioning, development investment, and strategic importance. Apply framework to top 20 requested features with detailed scores and recommendations.”

Why it works: This prompt combines multiple data sources for robust prioritization. Attention to both stated requests and revealed preferences through usage provides a balanced view. The framework creates a repeatable prioritization process.

Crisis and Risk Management Prompts

15. Reputational Risk Assessment

Prompt: “Assess reputational risks associated with [BUSINESS DECISION/ACTIVITY]. Research similar actions by other organizations in the past 5 years, documenting stakeholder reactions across customer, employee, investor, regulator, and advocacy group constituencies. Analyze media coverage patterns including tone, volume, and persistence of negative coverage. Track social media sentiment including viral moments, influencer reactions, and lasting perception changes. Assess long-term reputation impact through brand tracking data, customer loyalty metrics, and talent acquisition effects where available. Identify likely criticism narratives based on precedent and current social/political climate. Document opposition tactics used against similar actions including boycotts, divestment campaigns, regulatory complaints, and lawsuit strategies. Evaluate our organizational resilience including crisis response capabilities, stakeholder relationships, and reputation recovery mechanisms. Identify potential allies who might defend the action publicly. Provide risk mitigation recommendations including communication strategies, stakeholder engagement approaches, and monitoring indicators for early warning of backlash.”

Why it works: This prompt drives realistic risk assessment through precedent analysis. A multi-stakeholder perspective ensures a comprehensive view. Mitigation focus makes research actionable rather than merely descriptive.

Specialized Domain Prompts

16. Supply Chain Intelligence

Prompt: “Map the supply chain for [PRODUCT/COMPONENT] identifying tier 1 suppliers, tier 2 suppliers, and critical tier 3 suppliers. Document supplier concentration risks including single-source dependencies and limited alternative sources. Analyze geographic dependencies including manufacturing concentration in specific regions or countries. Research recent supply chain disruptions in this category from the past 3 years, documenting causes (including natural disasters, geopolitical events, labor actions, and capacity constraints), duration and business impact, and recovery strategies that proved effective. Assess emerging risks including geopolitical tensions, regulatory changes such as export controls, climate-related risks to key production regions, and supplier financial distress. Evaluate supplier financial stability and operational capabilities through available financial reports, credit ratings, and operational metrics. Identify alternative sourcing options including qualification requirements, lead times, and cost implications. Provide supply chain resilience recommendations including diversification strategies, inventory optimization approaches, and supplier relationship investments.”

Why it works: This prompt drives practical supply chain risk management through multi-tier visibility. Historical disruption analysis provides a concrete risk context. Recommendations balance resilience with cost efficiency.

17. Sustainability and ESG Research

Prompt: “Research environmental, social, and governance practices in [INDUSTRY] to benchmark [COMPANY] against peers and leaders. Analyze ESG reporting standards including frameworks used (such as GRI, SASB, and TCFD), disclosure levels, and third-party verification practices. Document stakeholder expectations including investor ESG integration, customer sustainability requirements, employee expectations, and regulatory compliance obligations. Assess leading company practices including carbon reduction targets and progress, renewable energy adoption, diversity and inclusion initiatives with representation metrics, governance structures and board composition, supply chain responsibility programs, and community investment approaches. Evaluate investor perspectives on ESG performance including ESG fund inclusion criteria, valuation impact research, and engagement priorities. Identify material ESG risks and opportunities specific to [BUSINESS MODEL] through materiality assessments and industry-specific factors. Analyze our current performance against benchmarks, identifying gaps and areas of leadership. Provide ESG improvement roadmap with specific initiatives, metrics and targets, implementation timeline, and resource requirements. Prioritize actions by materiality, stakeholder importance, and implementation feasibility.”

Why it works: This prompt connects ESG to business strategy rather than treating it as a compliance exercise. Materiality focus ensures resource allocation to the highest-impact areas. Competitive benchmarking provides context for goal-setting.

Additional Research Prompts

18. Partnership Opportunity Identification

Prompt: “Identify potential strategic partners for [BUSINESS OBJECTIVE] in [INDUSTRY/GEOGRAPHY]. Research organizations with complementary capabilities that fill gaps in our offering, aligned strategic priorities based on public statements and observed behavior, and cultural compatibility assessed through values, work practices, and past partnership track record. Assess partner candidates on market access they provide to customer segments or geographies, technology assets including IP and development capabilities, customer relationships and brand strength, and financial stability and investment capacity. Analyze their current partnership portfolio and strategy, partnership track record including successful and failed partnerships, and strategic priorities based on recent moves. Map potential value creation mechanisms including revenue synergies, cost synergies, capability building, and market position strengthening. Evaluate partnership structures from loose collaboration to joint venture, comparing advantages and risks. Identify potential friction points including competing products, conflicting partnerships, or misaligned incentives. Prioritize opportunities by strategic fit scored across multiple dimensions, value creation potential with estimated economics, and likelihood of successful collaboration based on cultural fit and incentive alignment. For top opportunities, provide partnership approach recommendations.”

19. Technology Adoption Readiness

Prompt: “Assess organizational readiness to adopt [TECHNOLOGY] for [USE CASE]. Evaluate technical readiness including current infrastructure compatibility, required new capabilities, skill gaps in the current team, and integration with existing systems. Assess organizational readiness including stakeholder support levels, cultural factors affecting adoption, change management capacity, and competing priorities. Document resource requirements including implementation costs, ongoing operational costs, and opportunity costs of team time. Research adoption timelines from comparable organizations, identifying factors that accelerated or delayed adoption. Analyze adoption risks including technical risks, organizational risks, vendor risks if applicable, and opportunity cost of delaying adoption. Identify adoption prerequisites that must be addressed first. Map stakeholder concerns across different organizational levels and functions. Provide a phased adoption roadmap starting with pilot or proof of concept, expanding to production use, and scaling across the organization. For each phase, specify success criteria, resource requirements, timeline, and key risks.”

20. Pricing Strategy Research

Prompt: “Research pricing strategies for [PRODUCT/SERVICE] in [MARKET]. Analyze competitive pricing including price points across tiers, packaging and bundling approaches, contract terms and flexibility, and discount patterns. Assess value metrics that correlate with willingness to pay through customer research, revealed preferences, and pricing experiments where published. Research pricing models including usage-based, subscription, freemium, and transaction-based approaches, evaluating advantages and disadvantages for our context. Document price sensitivity by customer segment including enterprise versus SMB, geographic differences, and industry variations. Analyze pricing evolution over time in this category, identifying trends toward higher or lower prices and changing value propositions. Research price anchoring strategies and positioning. Evaluate pricing page optimization including information architecture, comparison tables, and call-to-action design. For each viable pricing model, estimate revenue implications across different customer adoption scenarios. Provide recommended pricing strategy with detailed rationale, testing approach to validate assumptions, and metrics for ongoing optimization.”

21. Talent Market Intelligence

Prompt: “Research the talent market for [ROLE/SKILL SET] in [GEOGRAPHY]. Analyze talent supply including university programs producing relevant graduates, bootcamps and alternative education, and talent pools in adjacent roles who could transition. Assess talent demand through job posting volume trends, hiring velocity at major employers, and competition for senior versus entry-level talent. Document compensation packages including salary ranges by experience level, equity compensation norms, benefits expectations, and geographic variations. Research retention challenges including average tenure, common departure reasons, and competitive poaching patterns. Identify talent attraction factors beyond compensation including remote work policies, learning opportunities, mission alignment, and cultural factors. Map talent communities including conferences, online forums, open source projects, and professional associations. Analyze the employer branding strategies of successful talent attractors. Provide talent acquisition strategy recommendations including sourcing channels, compelling value proposition, competitive compensation positioning, and retention initiatives.”

Prompt Engineering Principles

Effective research prompts share common characteristics that maximize agent performance regardless of specific domain or question type. Understanding these principles enables organizations to craft powerful prompts for their unique needs.

Specificity: Vague requests yield generic responses while precise parameters guide focused investigation. Rather than ‘research competitors,’ specify ‘identify top 5 competitors by market share, documenting their pricing models, target customers, and product roadmap signals from the past 6 months.’ Specific requests also let agents determine when they have gathered sufficient information, whereas open-ended queries never quite feel complete.

Context framing: Establishing why research matters helps agents prioritize relevant information and ignore tangential findings. Context includes the intended use of the research, such as supporting M&A decisions, informing product roadmaps, or assessing regulatory risk; a decision timeline indicating the urgency and depth required; and constraints like budget limitations or resource availability. With proper context, agents can make intelligent tradeoffs between speed and comprehensiveness.

Structural guidance: Providing frameworks for organizing findings creates consistency and completeness. Frameworks might include analytical models like SWOT, Porter’s Five Forces, or jobs-to-be-done; information categories such as technology, market, competition, and regulation; evaluation dimensions for comparing alternatives; or narrative structures like problem-solution-implications. Structural guidance prevents agents from producing unorganized information dumps.

Outcome orientation: Focusing on decisions or actions that research will inform ensures practical relevance. Outcome-oriented prompts specify whether research should support go/no-go decisions, rank alternatives, identify risks, uncover opportunities, or validate hypotheses. By knowing how research will be used, agents can emphasize actionable insights over interesting but irrelevant information.

Source guidance: Specifying preferred source types, recency requirements, and authority standards improves research quality. Source guidance might include prioritizing primary sources over secondary interpretation, requiring peer-reviewed research for scientific claims, specifying recency thresholds like ‘published within 2 years,’ or identifying authoritative sources by name. Clear source expectations enable agents to filter massive information spaces efficiently.

Constraint specification: Defining boundaries prevents agents from pursuing tangential paths. Constraints include temporal scope such as ‘focus on developments since 2020’; geographic scope such as ‘North American market only’; industry scope such as ‘enterprise software companies’; or complexity limits such as ‘high-level overview suitable for executive audience.’ Well-defined constraints keep research focused without sacrificing important context.

Synthesis requirements: Embedding synthesis requirements in prompts drives insight generation rather than mere aggregation. Effective prompts explicitly request pattern identification, contradiction resolution, causal analysis, gap identification, prioritization, or recommendations. Without synthesis requirements, agents may simply compile information without adding analytical value.
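One way to apply these principles consistently is to treat each of them as a field that a prompt must fill in. The sketch below is illustrative only: the ResearchPrompt class, its field names, and the rendered wording are assumptions made here, not a standard format. The example values reuse the competitor research example from the specificity principle.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchPrompt:
    question: str       # specificity: the precise ask
    context: str        # context framing: why the research matters
    framework: str      # structural guidance: how to organize findings
    decision: str       # outcome orientation: what the research informs
    sources: list[str] = field(default_factory=list)      # source guidance
    constraints: list[str] = field(default_factory=list)  # constraint specification
    synthesis: list[str] = field(default_factory=list)    # synthesis requirements

    def render(self) -> str:
        """Assemble the fields into a single prompt, skipping empty lists."""
        parts = [
            self.question,
            f"Context: {self.context}",
            f"Organize findings using: {self.framework}",
            f"This research will inform: {self.decision}",
        ]
        for label, values in (
            ("Source requirements", self.sources),
            ("Constraints", self.constraints),
            ("Synthesis required", self.synthesis),
        ):
            if values:
                parts.append(f"{label}: " + "; ".join(values))
        return "\n".join(parts)


prompt = ResearchPrompt(
    question=(
        "Identify top 5 competitors by market share, documenting their pricing "
        "models, target customers, and product roadmap signals from the past 6 months."
    ),
    context="Informing next year's product roadmap",
    framework="SWOT for each competitor",
    decision="Ranking which competitor moves require a response",
    sources=["prioritize primary sources", "published within 2 years"],
    constraints=["North American market only", "enterprise software companies"],
    synthesis=["identify patterns across competitors", "flag contradictions between sources"],
)
text = prompt.render()
print(text)
```

Making the first four fields required means a prompt cannot be built without stating the question, context, framework, and decision, which is the point of the principles above.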

For more prompt engineering techniques, see our article AI Prompt Engineering Guide for Marketing.

Case Studies: AI Research Agents in Action

Real-world implementations reveal both the transformative potential and practical challenges of AI research agents. These case studies span industries and use cases, documenting measurable impacts while highlighting lessons learned from deployments at scale. Each case provides implementation details, performance metrics, and strategic insights applicable to similar scenarios.

Case Study 1: Investment Research at Global Asset Manager

Background: A $50 billion asset management firm struggled with research coverage depth and breadth. The firm employed 25 equity analysts, each capable of deeply researching 20-30 companies annually through fundamental analysis, including financial modeling, management interviews, site visits, and industry analysis. However, comprehensive market coverage would require monitoring 500+ companies across their investable universe. This coverage gap meant analysts missed important developments at companies outside their core coverage, reducing their ability to identify new investment opportunities or detect emerging risks to existing positions. Markets moved faster than human analysts could track, creating information asymmetry that disadvantaged the firm versus larger competitors with more analyst resources.

Implementation: The firm deployed research agents to provide continuous monitoring of its full investable universe. Agents tracked multiple information sources including quarterly and annual earnings releases, SEC filings (such as 10-Ks, 10-Qs, and 8-Ks), press releases and company announcements, news coverage from financial and industry press, sell-side analyst reports from major investment banks, earnings call transcripts with sentiment analysis, management interviews and conference presentations, social media discussions about companies and products, patent filings indicating innovation direction, and job postings revealing strategic priorities. Agents generated daily briefings highlighting significant developments across the universe with materiality scoring, weekly deep-dive reports on specific companies showing unusual activity, quarterly comprehensive competitive analysis for each major position, and real-time alerts for material events requiring immediate analyst attention such as earnings surprises, management changes, or major strategic announcements.

Technical Architecture: The system combined multiple AI models in a hierarchical structure designed for efficiency and accuracy. The filtering layer processed thousands of daily information items using lightweight classification models to identify potentially material information based on content type, source authority, novelty, and preliminary sentiment. Items flagged as potentially material flowed to analysis agents using advanced language models to extract key facts structured by category, assess materiality using learned patterns from analyst feedback, identify connections to existing investment themes and positions, and flag contradictions with previous information. Synthesis agents aggregated findings across sources and time periods, generating coherent narratives while preserving important nuance, identifying patterns across multiple companies suggesting industry trends, flagging contradictions requiring analyst review, and producing natural language summaries with appropriate caveats. The system maintained a knowledge graph connecting companies, products, markets, executives, and events, enabling sophisticated relationship queries. Feedback mechanisms captured analyst assessment of agent output quality, feeding continuous model improvement.
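The hierarchical structure described above (a cheap filter, then analysis, then synthesis) can be sketched in a few lines. Everything in this example is an assumption made for illustration: the Item fields, the authority weights, the 0.5 threshold, and the analyze function, which stands in for a call to a language model. The case study does not describe the firm's actual implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Item:
    company: str
    source: str    # e.g. "sec_filing", "news", "social"
    text: str
    is_new: bool   # novelty flag: not seen in earlier runs


# Hypothetical authority weights; a real system would learn or calibrate these.
SOURCE_AUTHORITY = {"sec_filing": 1.0, "earnings_call": 0.9, "news": 0.6, "social": 0.3}


def filter_score(item: Item) -> float:
    """Cheap first-pass score standing in for a lightweight classifier."""
    authority = SOURCE_AUTHORITY.get(item.source, 0.2)
    novelty = 1.0 if item.is_new else 0.3
    return authority * novelty


def analyze(item: Item) -> dict:
    """Stand-in for the expensive language-model analysis step."""
    return {"company": item.company, "source": item.source, "fact": item.text.strip()}


def synthesize(findings: list[dict]) -> dict[str, list[str]]:
    """Group extracted facts by company, keeping the source as a citation."""
    by_company: dict[str, list[str]] = defaultdict(list)
    for f in findings:
        by_company[f["company"]].append(f"{f['fact']} [{f['source']}]")
    return dict(by_company)


def run_pipeline(items: list[Item], threshold: float = 0.5) -> dict[str, list[str]]:
    # Only items that pass the cheap filter reach the expensive analysis step.
    flagged = [i for i in items if filter_score(i) >= threshold]
    return synthesize([analyze(i) for i in flagged])


items = [
    Item("ExampleCo", "sec_filing", "8-K filed announcing CFO departure", True),
    Item("ExampleCo", "social", "Rumor about a product delay", True),
    Item("OtherCorp", "news", "Repeat coverage of last quarter's results", False),
]
briefing = run_pipeline(items)
print(briefing)
```

The design point is cost control: the filter runs on every item, while the expensive step runs only on the small fraction that is flagged.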

Results: Research coverage increased by 300% without adding analyst headcount, expanding from 150 actively monitored companies to 600. Average response time to material news events decreased from 4 hours to 15 minutes, enabling faster trading decisions. Analysts reported spending 60% less time on information gathering and administrative tasks, redirecting this time to high-value analysis, company management engagement, and investment decision-making. User satisfaction among analysts reached 85% after initial skepticism, with particular appreciation for comprehensive earnings call analysis and competitive intelligence. The firm identified 15 investment opportunities in the first year that would have been missed under the previous research model, generating $42 million in additional alpha. Risk management improved as the system flagged deteriorating fundamentals at 8 positions ahead of broader market recognition, enabling early exits that avoided $18 million in losses. Total calculated value from improved returns and avoided losses came to $60 million ($42 million plus $18 million) against $8 million in system development and operational costs.

Key Lessons: Success required clear role definition between humans and AI, with agents handling monitoring and pattern recognition while humans focused on judgment and strategy. The firm invested heavily in agent output quality metrics including false positive rate under 5%, materiality assessment accuracy validated against analyst review, citation accuracy verified through sampling, and synthesis quality evaluated through rubrics. They learned that agent performance degraded when monitoring too many disparate industries simultaneously, requiring specialization by sector for optimal results. The most valuable agent capability proved to be comprehensive monitoring rather than deep analysis, as analysts still wanted to perform detailed investigation themselves but needed awareness of what required investigation. Integration with existing analyst workflows through familiar tools proved critical for adoption, as standalone systems created friction. Transparency about agent reasoning and uncertainty built trust, while black-box outputs generated skepticism. Continuous improvement through analyst feedback loops proved essential, with quality improving 40% over the first year through learning from corrections.
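The quality metrics mentioned above can be computed directly from analyst review data. The sketch below assumes each reviewed item records whether the agent flagged it as material and whether the analyst agreed. It uses the standard definition of false positive rate (false positives divided by all items the analyst judged not material). The case study does not say which definition the firm used, and the sample counts are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Review:
    agent_flagged: bool      # agent marked the item as material
    analyst_material: bool   # analyst's judgment on review


def quality_metrics(reviews: list[Review]) -> dict[str, float]:
    """Compute false positive rate and overall agreement from analyst reviews."""
    fp = sum(r.agent_flagged and not r.analyst_material for r in reviews)
    tn = sum(not r.agent_flagged and not r.analyst_material for r in reviews)
    agree = sum(r.agent_flagged == r.analyst_material for r in reviews)
    return {
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "materiality_accuracy": agree / len(reviews) if reviews else 0.0,
    }


# Illustrative sample: 20 true positives, 2 false positives,
# 3 false negatives, 75 true negatives.
reviews = (
    [Review(True, True)] * 20
    + [Review(True, False)] * 2
    + [Review(False, True)] * 3
    + [Review(False, False)] * 75
)
metrics = quality_metrics(reviews)
print(metrics)
meets_target = metrics["false_positive_rate"] < 0.05
```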

Case Study 2: Competitive Intelligence for Technology Company

Background: A $200 million ARR SaaS company competing in the marketing automation space faced competitive threats from both established players like Adobe and Salesforce and emerging startups with innovative approaches. Their competitive intelligence team of 3 people manually tracked 25 competitors through competitor websites, pricing pages, feature documentation, review sites, social media, and news coverage. However, this labor-intensive process meant most analysis was weeks or months old by publication time. Sales teams frequently encountered competitor features, pricing changes, or positioning shifts they hadn’t seen documented in competitive battle cards. Win/loss analysis revealed that sales reps lacked current competitive intelligence in 40% of competitive situations. The manual process also limited coverage to major competitors, missing emerging threats from well-funded startups until they became significant revenue threats.

Implementation: Research agents provided comprehensive competitive monitoring across 75 competitors through automated tracking of competitor websites with daily change detection, product documentation and API references, pricing and packaging changes, job postings revealing strategic priorities and team growth, social media presence and messaging, news coverage and press releases, customer reviews on G2, Capterra, and TrustRadius, forum discussions comparing alternatives, patent filings indicating innovation direction, and conference presentations and webinars. Agents generated weekly competitive intelligence briefings synthesizing major developments, maintained living competitive battle cards with automatic updates, provided sales teams with just-in-time competitor intelligence during active deals through integration with CRM, and created monthly strategic analysis identifying competitive trends and threats. The system implemented a natural language interface allowing sales reps to ask questions like ‘How does Competitor X handle email personalization?’ and receive current, cited responses.

Results: Competitive coverage expanded to 75 companies including 50 previously untracked startups. Information freshness improved dramatically, with the average age of competitive intelligence decreasing from 60 days to 3 days. Sales win rates against the top 3 competitors increased by 12 percentage points over 6 months, attributed to better competitive positioning based on current intelligence. Sales rep utilization of competitive intelligence tools increased from 25% to 78% due to easy access and current information. The company detected and responded to a major competitor’s pricing change within 24 hours, preventing significant customer churn through immediate competitive response. The product team identified 8 competitive features gaining traction before they became table stakes, accelerating the roadmap response. Marketing adjusted positioning messaging 6 weeks faster than previous capability based on competitive messaging shifts. The 3-person competitive intelligence team redirected 70% of their time from information gathering to strategic analysis and sales enablement, dramatically increasing their strategic impact.

Key Lessons: Integration with sales workflows proved critical for adoption, with CRM integration and a Slack bot interface driving usage far more than the standalone portal. Initial resistance from sales teams viewing AI-generated intelligence skeptically disappeared once they experienced timely, accurate insights during competitive situations. The team learned to focus agents on tracking changes rather than static information, as delta detection provided higher value than comprehensive but static profiles. Change detection algorithms needed careful tuning to avoid alert fatigue, requiring materiality scoring that distinguished significant changes from minor updates. Human curation remained important for translating intelligence into sales guidance, as raw information required contextualization for front-line teams. The most valuable agent capability was continuous monitoring across a broad competitor set rather than deep analysis of individual competitors, enabling the team to maintain awareness that they couldn’t achieve manually. The natural language interface lowered adoption barriers significantly versus structured queries or navigation. Quality control through spot-checking and user feedback drove continuous improvement, with accuracy improving from 82% initially to 94% after 6 months of feedback-driven enhancement. The company realized that competitive intelligence agents provided not just efficiency but a fundamentally new capability through a breadth of coverage impossible with human-only approaches.
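Delta detection with materiality scoring, as described in these lessons, can be illustrated with Python's standard difflib module. The keyword weights and the alert threshold below are hypothetical values chosen for the example. A production system would likely use a learned classifier rather than keyword matching.

```python
import difflib

# Hypothetical keyword weights: pricing changes matter more than copy edits.
MATERIAL_TERMS = {"price": 3, "pricing": 3, "$": 3, "plan": 2, "launch": 2, "integration": 1}
ALERT_THRESHOLD = 3  # assumed cutoff below which a change is not worth an alert


def changed_lines(old: str, new: str) -> list[str]:
    """Return lines added or removed between two snapshots of a page."""
    diff = difflib.ndiff(old.splitlines(), new.splitlines())
    return [line[2:] for line in diff if line.startswith(("+ ", "- "))]


def materiality(lines: list[str]) -> int:
    """Sum the weights of material terms found in the changed lines."""
    score = 0
    for line in lines:
        lowered = line.lower()
        score += sum(w for term, w in MATERIAL_TERMS.items() if term in lowered)
    return score


old_page = "Pro plan: $99/month\nIncludes email automation\nCopyright 2025"
new_page = "Pro plan: $79/month\nIncludes email automation\nCopyright 2026"

delta = changed_lines(old_page, new_page)
score = materiality(delta)
should_alert = score >= ALERT_THRESHOLD
print(delta, score, should_alert)
```

In this sketch a footer-only change (the copyright year) scores zero and raises no alert, while the price change does, which is the distinction between significant changes and minor updates that the team needed to avoid alert fatigue.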

Case Study 3: Regulatory Compliance for Financial Services

Background: A regional bank with $15 billion in assets operating across 15 states faced complex, evolving regulatory requirements from multiple sources: federal banking regulators such as the OCC, FDIC, and Federal Reserve; state banking departments across 15 jurisdictions; the CFPB for consumer protection; FinCEN for anti-money laundering; the SEC for securities activities; and various state consumer protection agencies. Compliance staff spent 40% of their time manually reviewing regulatory updates through Federal Register monitoring, regulator website checks, industry association alerts, and legal firm newsletters. Despite this effort, the manual process created a risk of missing important updates while consuming resources that could address higher-priority compliance activities like control testing and risk assessment. Two previous instances where important regulatory updates were missed for several weeks created significant remediation costs and regulatory scrutiny.

Implementation: Research agents monitored all relevant regulatory sources including proposed and final rules, guidance documents and FAQs, enforcement actions and consent orders, regulatory examination priorities and focus areas, speeches and Congressional testimony by regulators, and industry commentary and legal analysis. Agents assessed relevance to the bank’s business activities across retail banking, commercial lending, wealth management, and mortgage origination. For relevant items, agents identified specific compliance obligations with requirement extraction, estimated implementation timeline and resources, assessed impact on current practices, identified responsible business units, and flagged items requiring legal review based on complexity or ambiguity. The system generated prioritized action lists with risk-based ranking, maintained a regulatory change log accessible across the compliance organization, provided dashboards showing compliance obligation status, and created a compliance calendar with upcoming deadlines. Integration with project management tools enabled tracking implementation through completion.

Results: The bank achieved 100% capture rate of applicable regulatory changes versus 85% historical rate under manual monitoring, verified through an external audit. Compliance team capacity increased by 40% as staff redirected time from monitoring to implementation, risk assessment, and control testing. Response time to new regulatory requirements decreased from 45 days on average to 15 days. Regulatory examination findings related to compliance awareness decreased by 60%. Most significantly, the system identified an obscure state regulatory change affecting overdraft practices that would have created significant compliance risk and potential enforcement action had it been missed, with estimated cost avoidance of $5 million. Board and audit committee confidence in regulatory compliance monitoring increased substantially. The system also provided unexpected strategic value by identifying regulatory trends across jurisdictions, enabling proactive advocacy through industry associations and better long-term planning.

Key Lessons: Regulatory domain complexity required extensive training data and human validation, with initial accuracy of 75% improving to 96% after 12 months of feedback incorporation. The team developed a feedback loop where compliance officers marked agent assessments of relevance and materiality as accurate or inaccurate, with each correction improving future performance. They learned that conservative tuning with a higher false positive rate but a lower false negative rate proved appropriate for regulatory compliance, where missing a requirement carried severe consequences. Over-alerting on possible relevance was acceptable as human review efficiently filtered false positives, while missing important requirements created unacceptable risk. The system’s value extended beyond compliance monitoring to strategic intelligence through regulatory trend analysis, horizon scanning for likely future regulations, and benchmarking against regulatory focus at peer institutions. Integration with implementation project management closed the loop from monitoring through compliance, preventing requirements from being identified but not acted upon. Natural language search of the regulatory knowledge base provided unexpected value, enabling staff to quickly research precedent and guidance on specific topics. The bank discovered that systematic regulatory intelligence capabilities provided a competitive advantage through faster adaptation to regulatory changes and better regulatory relationship management. Cost-benefit analysis showed 10x return on investment within 18 months through a combination of avoided compliance failures, increased staff productivity, and reduced external legal spend on regulatory monitoring services.
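
The conservative tuning described above can be illustrated with a minimal sketch. It assumes the agent emits a relevance score between 0 and 1 for each regulatory item and that compliance officers' accurate/inaccurate markings are available as boolean labels; the names `pick_threshold`, `false_positives`, and `min_recall` are hypothetical and not taken from the bank's system. The idea is to choose the strictest alert threshold that still catches the required share of truly relevant items, accepting whatever false positives follow.

```python
from typing import List, Tuple

# Each reviewed item: (agent relevance score in [0, 1], officer marked it relevant?)
Labeled = Tuple[float, bool]


def pick_threshold(reviewed: List[Labeled], min_recall: float = 0.99) -> float:
    """Return the highest alert threshold that still catches at least
    min_recall of the items officers marked relevant (score >= threshold alerts)."""
    positives = sum(1 for _, relevant in reviewed if relevant)
    if positives == 0:
        raise ValueError("need at least one item marked relevant")
    # Try distinct scores from strictest to most permissive.
    for threshold in sorted({score for score, _ in reviewed}, reverse=True):
        caught = sum(1 for score, relevant in reviewed if relevant and score >= threshold)
        if caught / positives >= min_recall:
            return threshold
    # Only reached if min_recall > 1.0; fall back to alerting on everything.
    return 0.0


def false_positives(reviewed: List[Labeled], threshold: float) -> int:
    """Count alerts that officers marked not relevant at this threshold."""
    return sum(1 for score, relevant in reviewed if not relevant and score >= threshold)


# Illustrative feedback sample (made-up values, not data from the case study).
feedback = [(0.9, True), (0.8, False), (0.6, True), (0.4, False), (0.3, True), (0.1, False)]
strict = pick_threshold(feedback, min_recall=0.6)        # tolerates a missed item
conservative = pick_threshold(feedback, min_recall=1.0)  # misses nothing in the sample
```

On this sample the conservative setting lowers the threshold and doubles the number of false positives, which is the trade the bank judged acceptable because human review filters over-alerts cheaply while a missed requirement does not get a second chance.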

Case Study 4: Patent Landscape for Pharmaceutical R&D

Background: A mid-sized pharmaceutical company with a development pipeline of 15 programs needed a comprehensive patent landscape analysis to assess freedom-to-operate and identify competitive threats before initiating expensive clinical trials. Traditional patent searches by specialized IP law firms cost $50,000-$100,000 per landscape analysis and required 6-8 weeks for delivery, creating bottlenecks in the innovation pipeline. The company typically conducted 8-10 patent landscapes annually, consuming $600,000-$800,000 of the IP budget and creating 2-3 month delays in program decisions. This slow, expensive process meant the company often made significant R&D investments before completing comprehensive patent due diligence, creating the risk of discovering blocking patents after substantial investment. The bottleneck also prevented opportunistic exploration of adjacent chemical space or alternative mechanisms that might circumvent patent barriers.

Implementation: Research agents analyzed multiple data sources including global patent databases covering US, EP, and other major jurisdictions, published patent applications, scientific literature describing related compounds, clinical trial registries showing competitive programs, and regulatory filings. Agents identified relevant patents based on chemical structure similarity using substructure and similarity search, therapeutic indication and target disease, mechanism of action, and compound class. For each relevant patent, agents mapped patent families across jurisdictions, assessed patent strength through claim analysis, identified key claims relevant to the program, evaluated prior art that might impact validity, and profiled competitive patent portfolios. The system generated preliminary freedom-to-operate assessments with risk level scoring and highlighted patents requiring detailed legal analysis due to high relevance or broad claims. Integration with chemical structure databases enabled automated structure-based searching.
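
Structure similarity search of the kind mentioned above is commonly scored with the Tanimoto coefficient, the size of the intersection of two structural fingerprints divided by the size of their union. The sketch below is an illustration under stated assumptions, not the company's implementation: it assumes each compound has already been reduced to a set of integer feature IDs by a cheminformatics toolkit (that step is not shown), and the names `tanimoto`, `rank_similar`, and the 0.5 cutoff are hypothetical.

```python
from typing import Dict, FrozenSet, List, Tuple

Fingerprint = FrozenSet[int]  # assumed: feature IDs produced by a cheminformatics toolkit


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto coefficient: shared features divided by all features present."""
    if not a and not b:
        return 0.0  # convention chosen here for two empty fingerprints
    return len(a & b) / len(a | b)


def rank_similar(
    query: Fingerprint, library: Dict[str, Fingerprint], cutoff: float = 0.7
) -> List[Tuple[str, float]]:
    """Return (patent_id, score) pairs at or above cutoff, most similar first."""
    scored = [(pid, tanimoto(query, fp)) for pid, fp in library.items()]
    hits = [(pid, score) for pid, score in scored if score >= cutoff]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Made-up fingerprints and placeholder IDs for illustration only.
query = frozenset({1, 2, 3, 4})
library = {
    "P1": frozenset({1, 2, 3, 4}),  # identical feature set
    "P2": frozenset({1, 2, 3, 5}),  # close analog: 3 shared of 5 total features
    "P3": frozenset({7, 8}),        # unrelated
}
hits = rank_similar(query, library, cutoff=0.5)
```

A cutoff that is too strict risks missing close structural analogs, so in a screening role it is usually safer to set it low and let downstream review discard weak matches.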

Results: Preliminary patent landscape analyses that previously took 6-8 weeks are now completed in 3 days, enabling faster program decisions. Cost per preliminary analysis decreased from $75,000 to $5,000 including required follow-on legal review of flagged patents, a 93% cost reduction. The company increased annual patent landscape volume to 35-40 analyses without increasing the IP budget, evaluating 4x more potential development programs and chemical series. More comprehensive patent due diligence earlier in development reduced late-stage surprises. Importantly, the system identified a previously undetected patent covering a close structural analog that would have blocked a major development program, saving an estimated $15 million in wasted R&D investment that would have been spent before traditional patent review. The system enabled rapid exploration of alternative chemical space when patents were identified, helping chemists design around IP barriers. Portfolio management improved as the team maintained current awareness of competitive patent activity across the entire therapeutic area. Time from program concept to patent-cleared development decreased from 3 months to 2 weeks, significantly accelerating innovation cycles.

Key Lessons: Domain specialization proved essential, as generic research agents struggled with patent-specific terminology, chemical structure representation, and patent citation patterns. The company developed specialized training focusing on patent documents, chemical structures, and relevant prior art. They worked with IP attorneys to validate agent assessments and develop quality rubrics specific to patent analysis. The team learned that agent value came from efficient initial screening rather than replacing legal expertise, with 80% of patents dismissed as clearly irrelevant, 15% requiring careful analysis, and 5% potentially blocking. IP attorneys dramatically increased productivity by focusing time on the 20% requiring detailed review rather than manually reviewing hundreds of potentially relevant patents. Accuracy in relevance determination improved from 78% initially to 94% after iterative refinement. The breakthrough came from recognizing agents as specialized research assistants rather than attempting full automation of patent analysis. Chemical structure search required specialized algorithms beyond text matching, necessitating integration with cheminformatics tools. The system also provided strategic value beyond individual program analysis through competitive intelligence on competitor patent strategies, white space identification for future R&D, and detection of emerging patent risks to existing products. The company realized that systematic patent intelligence capabilities provided a sustainable competitive advantage in an industry where IP landscape navigation determines success.
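
The screening role described above amounts to sorting patents into three buckets so that attorneys see only the ones that need them. A minimal sketch, assuming the agent produces a relevance score between 0 and 1 per patent; the bucket names and the `dismiss_below` and `blocking_above` thresholds are hypothetical and would need calibration against attorney-validated examples.

```python
from collections import Counter
from typing import Dict, List

BUCKETS = ("dismiss", "needs_analysis", "potentially_blocking")


def triage(relevance: float, dismiss_below: float = 0.2, blocking_above: float = 0.85) -> str:
    """Assign one patent to a review bucket based on its relevance score."""
    if relevance < dismiss_below:
        return "dismiss"
    if relevance >= blocking_above:
        return "potentially_blocking"
    return "needs_analysis"


def triage_summary(scores: List[float]) -> Dict[str, float]:
    """Share of patents landing in each bucket (all zeros for an empty list)."""
    counts = Counter(triage(score) for score in scores)
    total = len(scores)
    return {bucket: (counts[bucket] / total if total else 0.0) for bucket in BUCKETS}


# Synthetic scores shaped to mirror the 80/15/5 split reported in the case study.
scores = [0.05] * 80 + [0.5] * 15 + [0.9] * 5
summary = triage_summary(scores)
attorney_share = summary["needs_analysis"] + summary["potentially_blocking"]
```

Tracking the bucket shares over time is a cheap health check: a sudden drop in the dismissed share, for example, suggests the relevance model or the search scope has drifted.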

Case Study 5: Consumer Insights for CPG Product Development

Background: A consumer packaged goods company with $2 billion in annual revenue and a portfolio of food and beverage products needed continuous consumer insight generation to inform product development, positioning, and marketing strategies. Traditional market research through surveys, focus groups, and ethnographic studies cost $75,000-$150,000 per study and took 3-4 months from project initiation to final report. The company typically conducted 15-20 studies annually, consuming $1.5-$2 million of the insights budget. This slow, expensive process limited research to major decisions while leaving many tactical and exploratory questions unanswered. Product teams wanted faster feedback on concept tests, needed earlier warning of emerging consumer trends, and desired more continuous tracking of competitive product reception. The lag between consumer trend emergence and company awareness created the risk of missing important shifts or responding after competitors.

Implementation: Research agents analyzed consumer voice across multiple digital sources including social media discussions on platforms like Instagram, TikTok, Twitter, and Facebook, product reviews on retail sites like Amazon and Target, online forums and communities focused on food, health, and wellness, trend publications and influencer content, YouTube product reviews and unboxing videos, recipe sites showing product usage, and Reddit communities discussing products and brands. Agents tracked multiple dimensions including sentiment around product categories and specific products, unmet needs and desired product attributes, emerging preferences and trends, consumer language and terminology, influencer opinions and trending topics, and competitive product reception. The system generated hypothesis-rich reports identifying potential opportunities, tracked sentiment over time for early trend detection, and created segmented analysis showing varying perspectives across demographic and psychographic segments. A natural language interface enabled product teams to ask specific questions and receive rapid answers.

Results: Time-to-insight decreased from 3-4 months to 2-3 days for exploratory research questions, enabling much faster product development cycles. The company identified an emerging consumer preference for savory flavors in a traditionally sweet product category, leading to a successful product line extension that generated $30 million in first-year revenue and captured shelf space before competitors recognized the opportunity. Market research budget allocation shifted toward validation studies of agent-identified opportunities rather than expensive exploratory research, increasing research efficiency. Product teams reported making more informed decisions earlier in development, reducing costly late-stage pivots by 35%. Category managers gained competitive intelligence on new product launches within days versus weeks, enabling faster competitive response. The system detected emerging ingredient concerns (specific artificial additives) 6 months before mainstream media coverage, enabling proactive reformulation that maintained brand trust. Social listening identified unexpected product usage occasions that informed new marketing campaigns. Influencer relationship strategy improved through identification of authentic brand advocates versus paid partnerships.

Key Lessons: Social listening data provided speed and breadth but required careful interpretation to avoid bias. Agents detected authentic trends and patterns that traditional research missed, but online discussions skewed younger, more tech-savvy, and more vocal than representative consumers. The company learned to use agent research for hypothesis generation and opportunity identification, then validated findings through traditional research methods, ensuring representativeness. This hybrid approach combined speed and breadth of AI research with rigor and representativeness of primary research, dramatically improving research productivity. The most valuable agent capability was trend spotting and early warning rather than validation, as the system excelled at detecting weak signals before they became obvious. Sentiment analysis required careful calibration, as raw sentiment scores missed important context and nuance. The team developed domain-specific sentiment models trained on food and beverage discussions with human-validated examples. Integration with traditional research created powerful workflows where agents identified opportunities that quantitative surveys validated and qualitative research deepened. Consumer language analysis proved unexpectedly valuable for marketing messaging, revealing terminology and framing that resonated versus industry jargon that confused. Geographic and demographic segmentation of insights enabled more targeted product development and marketing. The company realized that continuous consumer intelligence provided a sustainable advantage in fast-moving categories where speed to market with relevant innovation determined category leadership. ROI analysis showed 15x return within 24 months through a combination of successful new products, avoided bad investments, and faster time-to-market.

Quality Control: Ensuring Research Reliability

Research quality determines whether AI agents deliver value or liability. Poor quality research creates false confidence, leading to bad decisions with real consequences including misallocated capital, failed product launches, missed competitive threats, regulatory violations, and strategic missteps. Establishing robust quality control mechanisms separates professional deployments from experimental prototypes. Organizations must implement systematic approaches to measuring, monitoring, and improving agent research quality that scale as agent usage grows.

Dimensions of Research Quality

Quality assessment must address multiple dimensions, each requiring distinct measurement approaches and improvement strategies.

Accuracy represents the foundation of research quality. Do agent findings reflect truth? Accuracy assessment requires verification against ground truth through fact-checking against authoritative sources, cross-referencing claims across independent sources, subject matter expert review providing domain validation, and temporal validation ensuring information remains current. Organizations should maintain accuracy metrics tracking false positives where agents assert as true something that is false, false negatives where agents miss important information, factual errors in specific claims, and citation errors where sources don’t support claims.
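
The accuracy metrics listed above can be computed from a sample of reviewer-checked items. The following is a minimal sketch under assumptions: the `ReviewedItem` record and its field names are hypothetical, and it presumes a reviewer has labeled both what the agent reported and what it should have reported.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ReviewedItem:
    agent_reported: bool     # did the agent include this in its findings?
    is_true: bool            # reviewer's ground-truth judgment
    citation_supports: bool  # does the cited source back the claim? (ignored if not reported)


def accuracy_metrics(items: List[ReviewedItem]) -> Dict[str, float]:
    """Summarize false positives, false negatives, and citation errors."""
    reported = [item for item in items if item.agent_reported]
    true_pos = sum(1 for item in reported if item.is_true)
    false_pos = len(reported) - true_pos
    false_neg = sum(1 for item in items if not item.agent_reported and item.is_true)
    bad_citations = sum(1 for item in reported if not item.citation_supports)
    return {
        "false_positives": false_pos,
        "false_negatives": false_neg,
        "precision": true_pos / len(reported) if reported else 0.0,
        "recall": true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0,
        "citation_error_rate": bad_citations / len(reported) if reported else 0.0,
    }


# Made-up review sample for illustration.
sample = [
    ReviewedItem(agent_reported=True, is_true=True, citation_supports=True),
    ReviewedItem(agent_reported=True, is_true=True, citation_supports=False),
    ReviewedItem(agent_reported=True, is_true=False, citation_supports=True),
    ReviewedItem(agent_reported=False, is_true=True, citation_supports=False),
]
metrics = accuracy_metrics(sample)
```

Keeping citation errors separate from factual errors matters because a claim can be true while its cited source fails to support it, and the two problems call for different fixes.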

Completeness measures whether research addresses all relevant dimensions. Incomplete research creates blind spots undermining decision quality. Assessment requires comparing agent output against comprehensive checklists, gap analysis by domain experts, coverage metrics tracking what percentage of relevant sources were consulted, and user feedback on whether critical questions went unanswered.
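
A coverage metric of the kind described above can be as simple as comparing the sources an agent consulted against an expert-maintained checklist. This sketch assumes such a checklist exists as a set of source identifiers; the function name `coverage` and the example identifiers are hypothetical.

```python
from typing import List, Set, Tuple


def coverage(relevant: Set[str], consulted: Set[str]) -> Tuple[float, List[str]]:
    """Return (share of relevant sources consulted, sorted list of missed sources)."""
    if not relevant:
        return 1.0, []  # nothing was required, so nothing was missed
    missed = sorted(relevant - consulted)
    return len(relevant & consulted) / len(relevant), missed


# Placeholder identifiers for illustration.
checklist = {"federal_register", "state_bulletins", "enforcement_actions", "agency_faqs"}
consulted = {"federal_register", "agency_faqs", "trade_press"}
share, gaps = coverage(checklist, consulted)
```

The list of missed sources is often more useful than the percentage itself, since it tells reviewers exactly where the blind spots are.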

Currency evaluates information recency and relevance. Outdated research misleads as severely as inaccurate research. Quality control must track source publication dates, identify use of superseded information, flag research relying on outdated data, and verify that time-sensitive claims reflect current state. Time-sensitive domains like competitive intelligence or regulatory compliance demand particularly rigorous currency verification.
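
Tracking publication dates lends itself to a simple automated check. The sketch below assumes each source carries a known publication date and that each research domain has an agreed maximum age; the function name `stale_sources`, the 90-day limit, and the source names are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, List


def stale_sources(published: Dict[str, date], as_of: date, max_age_days: int) -> List[str]:
    """Return names of sources published more than max_age_days before as_of."""
    cutoff = as_of - timedelta(days=max_age_days)
    return sorted(name for name, when in published.items() if when < cutoff)


# Made-up sources and dates for illustration.
sources = {
    "rule_update": date(2026, 1, 10),
    "legacy_memo": date(2025, 6, 1),
    "agency_faq": date(2025, 11, 2),  # exactly 90 days old on the as_of date below
}
flagged = stale_sources(sources, as_of=date(2026, 1, 31), max_age_days=90)
```

A date check like this catches old documents but not superseded ones, so it complements rather than replaces the review for superseded information.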

Source quality determines research reliability. Research synthesizing credible, authoritative sources merits higher confidence than research drawing from questionable sources. Quality control should assess source diversity ensuring multiple independent perspectives, source authority based on author expertise and publication venue, source independence identifying potential bias or conflicts of interest, and source currency for time-sensitive topics. Systematic source quality tracking identifies agents developing problematic source preferences.
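
One way to spot an agent developing problematic source preferences is to measure how concentrated its citations are. A minimal sketch, assuming each citation can be attributed to a publisher; the function name `source_concentration`, the publisher labels, and the 0.4 limit are hypothetical, and the measure addresses only diversity, not authority or independence.

```python
from collections import Counter
from typing import List, Tuple


def source_concentration(publishers: List[str]) -> Tuple[int, float]:
    """Return (number of distinct publishers, share of citations from the top one)."""
    if not publishers:
        return 0, 0.0
    counts = Counter(publishers)
    return len(counts), max(counts.values()) / len(publishers)


# Placeholder publisher labels, one per citation in a report.
cited = ["wire_service", "wire_service", "regulator_site", "industry_blog"]
distinct, top_share = source_concentration(cited)
over_reliant = top_share > 0.4  # hypothetical limit; tune per domain
```

Logging these two numbers per report makes it possible to see a drift toward a single favored publisher before it affects conclusions.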