Chain-of-Thought Prompting Guide: Master CoT for LLM Super-Reasoning

Large Language Models (LLMs) have revolutionized text generation, but have you noticed them stumble on complex logic or multi-step problems? It’s like asking a brilliant student for an answer without letting them show their work. They might get it right, but you don’t know how, and they’re more prone to errors when the problem gets tough. This is the LLM’s reasoning dilemma: a model can get stuck on a problem at any time. In today’s AI era, prompt engineering has become one of the most in-demand skills for developers and business owners, because an LLM’s output depends heavily on the prompt you give it.

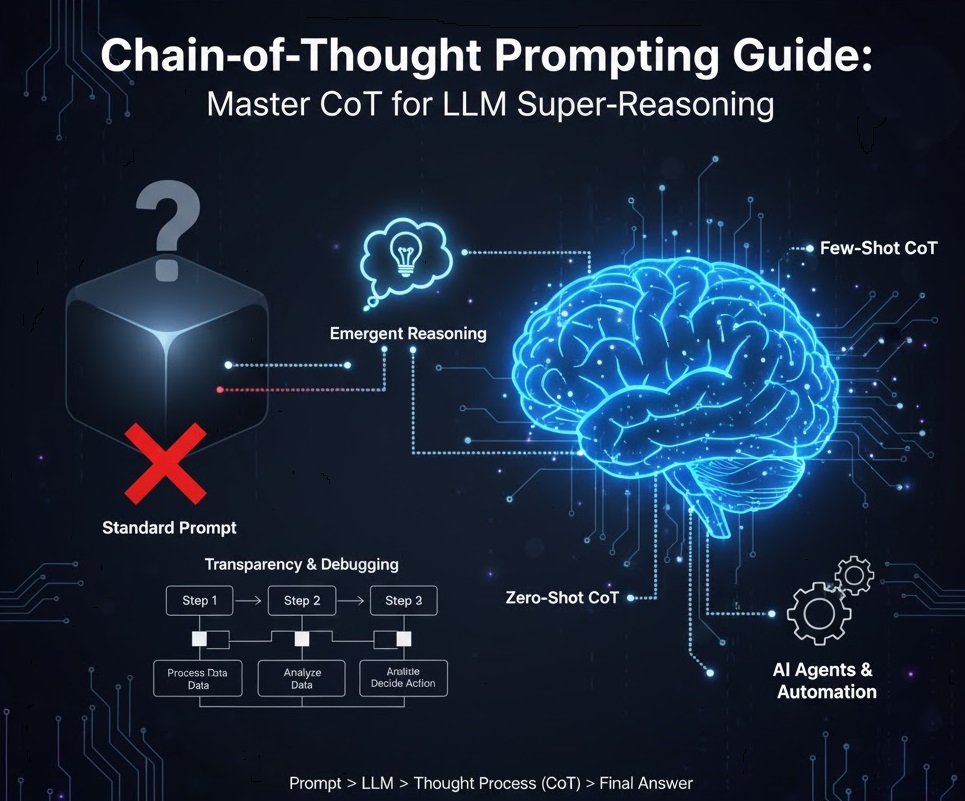

Enter Chain-of-Thought (CoT) Prompting:

CoT prompting is a prompt engineering technique that changes how LLMs process information. Instead of merely generating a final answer, CoT instructs the model to “think step by step,” breaking down complex tasks into a sequence of intermediate, logical steps. This simple approach draws out more of an LLM’s analytical capability, often improving accuracy on multi-step problems and making its decision-making process more transparent.

This Chain-of-Thought Prompting Guide takes a deep dive into the core mechanics of CoT, unveils its “secrets,” explores its different types, weighs the pros and cons, and showcases its role in advanced AI applications like AI Agents and automation workflows. By the end, you’ll be equipped to master CoT for LLM super-reasoning.

1. The Core “Secret”: How Chain-of-Thought Unlocks LLM Intelligence

The “secret” behind CoT’s effectiveness isn’t a complex algorithm; it’s a clever cognitive trick that mirrors human problem-solving. By demanding intermediate steps, CoT leverages the LLM’s architecture in powerful ways.

1.1. Decomposing Complexity: The “Scratchpad” Effect

At its heart, CoT prompting encourages the LLM to decompose multi-step problems into smaller, more manageable sub-problems. Think of it as providing the LLM with a mental scratchpad. Instead of attempting a single, giant leap to the answer, it takes sequential, justified steps. This dramatically reduces the likelihood of errors at each stage.

Technical Insight: When an LLM generates these intermediate thoughts, it effectively uses its own extensive context window as a computational workspace. This allows the model to allocate more “compute” or “thought” to the reasoning process, leading to a more robust and accurate solution.

1.2. The Emergent Reasoning Ability of LLMs

A fascinating aspect of CoT is its connection to the “emergent abilities” of LLMs: capabilities that only manifest once a model reaches a certain scale (i.e., parameter count). The ability to perform Chain-of-Thought reasoning is one such emergent capability, meaning it primarily works on larger, more sophisticated models like GPT-4, Claude 3, or Llama 2 (70B).

For professional prompt engineers, this is a vital takeaway: if a critical application relies on CoT, that reliance sets a minimum capability bar for model selection.

1.3. Enhancing Transparency and Interpretability

One of the most understated benefits of CoT prompting is the transparency it brings. By forcing the LLM to output its reasoning steps, we gain a crucial window into the model’s logic. This interpretability is invaluable for:

- Debugging: Pinpointing exactly where an AI Agent’s workflow might have veered off course.

- Trust Building: Understanding why an LLM arrived at a conclusion, which is essential for regulated industries or critical applications.

- Compliance: Meeting explainability requirements for AI systems.
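To make that transparency usable for debugging or auditing, it helps to separate the reasoning steps from the final answer. The snippet below is a minimal sketch of that idea, assuming the prompt asked the model to finish with a line starting with `Final Answer:`. That convention, the `split_reasoning` name, and the sample text are illustrative assumptions, not part of any library.

```python
import re
from typing import List, Optional, Tuple

def split_reasoning(output: str) -> Tuple[List[str], Optional[str]]:
    """Split a CoT response into reasoning steps and a final answer.

    Assumes (hypothetically) that the final answer sits on a line that
    starts with 'Final Answer:'. Returns (steps, answer); answer is None
    when no such line is present.
    """
    steps: List[str] = []
    answer: Optional[str] = None
    for raw_line in output.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank lines between steps
        match = re.match(r"final answer:\s*(.+)", line, flags=re.IGNORECASE)
        if match:
            answer = match.group(1)
        else:
            steps.append(line)
    return steps, answer

# Hand-written sample response, used only to exercise the parser.
sample = """Step 1: 15% of 200 is 30.
Step 2: 200 + 30 = 230.
Step 3: 230 + 25 = 255.
Final Answer: 255"""

steps, answer = split_reasoning(sample)
for number, step in enumerate(steps, start=1):
    print(number, step)
print("answer:", answer)
```

Logging `steps` alongside `answer` gives you a record to inspect when a result looks wrong.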

2. Types of CoT Prompting: Choosing Your Approach

Effective prompt engineering means knowing which CoT method to deploy based on your task’s complexity and your chosen LLM’s capabilities.

2.1. Zero-Shot Chain-of-Thought (Zero-Shot CoT): The Simple Trigger

This is the most straightforward and often surprisingly effective form of CoT prompting. It requires minimal effort from the user.

- How it Works: You simply provide your question or task, then append a specific phrase that subtly “triggers” the model’s step-by-step reasoning process.

- The Magic Phrase: The most common and effective trigger is: “Let’s think step by step.”

- Pros:

  - Minimal Effort: Easiest to implement; no examples needed.

  - Highly Effective: Works remarkably well on modern, powerful LLMs due to their advanced emergent abilities.

- Cons:

  - Less Reliable: May be less consistent for highly specialized, nuanced, or truly novel tasks compared to few-shot.

  - Model Dependent: Relies entirely on the model’s inherent ability to reason without explicit demonstrations.

- Practical Example:

  - Prompt: “If a company had 200 employees and grew by 15% last year, and then hired 25 more this year, how many employees do they have now? Let’s think step by step.”

  - LLM Output:

    - Thought 1: Calculate the 15% growth: 200 × 0.15 = 30.

    - Thought 2: Add the growth to the initial number: 200 + 30 = 230.

    - Thought 3: Add the new hires: 230 + 25 = 255.

    - Final Answer: 255 employees.
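In code, Zero-Shot CoT amounts to appending the trigger phrase to the question before sending it to a model. The sketch below shows only the prompt construction, plus a reference calculation you could use to check the model's answer. The function and variable names are illustrative, and the call to an actual LLM API is deliberately left out.

```python
COT_TRIGGER = "Let's think step by step."

def zero_shot_cot(question: str) -> str:
    """Append the zero-shot CoT trigger phrase to a question."""
    return f"{question.strip()} {COT_TRIGGER}"

prompt = zero_shot_cot(
    "If a company had 200 employees and grew by 15% last year, "
    "and then hired 25 more this year, how many employees do they have now?"
)
print(prompt)

# Reference arithmetic for checking the model's answer.
growth = 200 * 15 // 100      # 15% of 200
after_growth = 200 + growth   # headcount after last year's growth
expected = after_growth + 25  # headcount after this year's hires
print(expected)
```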

2.2. Few-Shot Chain-of-Thought (Few-Shot CoT): Learning by Example

For more complex, customized, or domain-specific tasks, Few-Shot CoT is the gold standard.

- How it Works: The user provides one or more complete examples within the prompt. Crucially, each example showcases not just the problem and its final answer, but also the full step-by-step reasoning process used to arrive at that answer.

- Pros:

  - Significant Performance Boost: Dramatically enhances accuracy and reliability on complex, specialized tasks.

  - Teaches Format: Instills a specific reasoning style, depth, or desired output format in the model.

  - Ideal for Specialization: Perfect for custom Prompt Engineering in niche domains.

- Cons:

  - Token Consumption: Providing examples increases prompt length, leading to higher API costs and potentially increased latency.

  - Manual Effort: Requires careful, manual crafting of high-quality, diverse examples.

- Practical Example (Abbreviated):

  - Example 1:

    - Problem: Analyze sentiment of “This service was awful but the staff were lovely.”

    - Steps: 1) Identify “service was awful” (negative). 2) Identify “staff were lovely” (positive). 3) Conclude mixed sentiment.

    - Result: Mixed.

  - New Problem: “Analyze sentiment of ‘The product worked, but delivery was a nightmare.’”

  - LLM Output: The model will now follow the multi-step pattern you provided to analyze the new problem.
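A Few-Shot CoT prompt like the one above can be assembled programmatically from worked examples. This is a minimal sketch: the `Example` class, the `few_shot_cot` function, and the `Problem / Steps / Result` layout are assumptions chosen to mirror the example, not a required format.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Example:
    problem: str
    steps: List[str]
    result: str

def few_shot_cot(examples: List[Example], new_problem: str) -> str:
    """Build a prompt of worked examples followed by the new problem."""
    blocks = []
    for example in examples:
        numbered = " ".join(
            f"{i}) {step}" for i, step in enumerate(example.steps, start=1)
        )
        blocks.append(
            f"Problem: {example.problem}\n"
            f"Steps: {numbered}\n"
            f"Result: {example.result}"
        )
    # End on an open "Steps:" line so the model continues the pattern.
    blocks.append(f"Problem: {new_problem}\nSteps:")
    return "\n\n".join(blocks)

demo = Example(
    problem='Analyze sentiment of "This service was awful but the staff were lovely."',
    steps=[
        'Identify "service was awful" (negative).',
        'Identify "staff were lovely" (positive).',
        "Conclude mixed sentiment.",
    ],
    result="Mixed.",
)

prompt = few_shot_cot(
    [demo],
    'Analyze sentiment of "The product worked, but delivery was a nightmare."',
)
print(prompt)
```

Ending the prompt on an open `Steps:` line is one way to nudge the model into producing its reasoning before the result.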


2.3. Advanced Variation: Auto-CoT

For those looking to automate the creation of effective few-shot examples, Auto-CoT is an advanced method. It typically uses Zero-Shot CoT to automatically generate reasoning chains for a set of diverse examples, clusters similar problems, and then leverages these automatically generated, high-quality examples to create robust Few-Shot CoT prompts at scale.
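The pipeline described above can be sketched in a few lines. To stay self-contained, this sketch swaps in a crude word-overlap grouping for the clustering step (a real pipeline would more likely cluster on embeddings) and takes the model call as a `generate` parameter. All names here, and the stub model, are hypothetical.

```python
import re
from typing import Callable, List, Set, Tuple

def words(question: str) -> Set[str]:
    """Lowercased alphabetic words in a question (digits are ignored)."""
    return set(re.findall(r"[a-z]+", question.lower()))

def overlap(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity between two word sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

def cluster_questions(questions: List[str], threshold: float = 0.3) -> List[List[str]]:
    """Greedy grouping: join the first cluster whose seed question is similar enough."""
    clusters: List[List[str]] = []
    for question in questions:
        for cluster in clusters:
            if overlap(words(question), words(cluster[0])) >= threshold:
                cluster.append(question)
                break
        else:
            clusters.append([question])  # no match: start a new cluster
    return clusters

def build_demos(
    questions: List[str],
    generate: Callable[[str], str],
    threshold: float = 0.3,
) -> List[Tuple[str, str]]:
    """Pick one question per cluster and generate its chain with Zero-Shot CoT."""
    demos = []
    for cluster in cluster_questions(questions, threshold):
        representative = cluster[0]
        chain = generate(f"{representative} Let's think step by step.")
        demos.append((representative, chain))
    return demos

def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call; returns a placeholder chain."""
    return f"[reasoning chain for: {prompt}]"

questions = [
    "What is 12 plus 7?",
    "What is 30 plus 45?",
    "How many days are in three weeks?",
    "How many days are in two weeks?",
]
demos = build_demos(questions, fake_generate)
for question, chain in demos:
    print(question, "->", chain)
```

The resulting `(question, chain)` pairs would then serve as the worked examples in a Few-Shot CoT prompt. In practice you would also want to filter out chains that look wrong, since automatically generated reasoning can contain mistakes.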

3. Pros and Cons: Weighing the Trade-Offs of CoT Prompting

While Chain-of-Thought Prompting is a game-changer, professional deployment requires understanding its benefits and limitations.

| Aspect | Pros (Why You Should Master CoT) | Cons (When CoT Falls Short) |
| --- | --- | --- |
| Accuracy & Robustness | Massively increases accuracy on multi-step reasoning, logical inference, and complex mathematical problems. Leads to fewer errors and more reliable outputs. | Misleading Reasoning: While steps are provided, they don’t always reflect true internal cognition. The LLM can still “hallucinate” logical-looking but incorrect steps, making the output deceptively plausible. |
| Interpretability & Debugging | Provides crucial transparency, allowing you to debug the model’s thought process. This builds trust and is vital for auditing AI Agent decisions and regulatory compliance. | Slower & More Costly: Generating extra “thought” tokens significantly increases API latency and incurs higher token costs, especially with Few-Shot CoT. |
| Versatility | Applicable across a wide array of complex domains, from scientific problem-solving and legal analysis to complex code generation and strategic planning. | Model Dependency: Less effective, inconsistent, or outright fails on smaller, less capable LLMs. It’s truly an emergent ability of larger models. |
| Ease of Adoption (Zero-Shot) | Zero-Shot CoT is arguably the simplest yet most impactful prompt enhancement available, requiring just a few extra words. | Overthinking Simple Tasks: For very simple, direct questions (e.g., “What is the capital of France?”), CoT adds unnecessary latency and complexity without improving accuracy. |

4. Real-World Impact: CoT as the Engine for Advanced AI

Chain-of-Thought Prompting is more than just a clever trick; it’s a foundational capability that powers the next generation of advanced AI applications.

4.1. The Core of Agentic AI Systems

Perhaps the most critical application of CoT lies in Agentic AI Systems. CoT forms the “Thought” step in the widely used ReAct Pattern (Reasoning → Action → Observation). An AI Agent must reason (using CoT) before it can decide on an action (like using a tool or API). This internal monologue ensures the agent can:

- Plan: Develop a multi-step approach to a complex goal.

- Self-Correct: Identify and recover from errors or unexpected observations.

- Strategize: Make informed decisions about which external tools to use.

While the ReAct pattern is conceptual, you rarely need to code the iterative loop from scratch. Modern LLM orchestration libraries handle this complexity for you. LangChain, for instance, streamlines the “Thought” and “Action” sequence: it provides built-in Agents and Tools modules designed to manage the conversation history, expose available tools to the LLM, and parse the Thought (CoT) and Action output so the agent follows the ReAct architecture. By leveraging these framework components, developers can focus on defining robust tools and precise prompts rather than managing the underlying loop logic.
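To see what the Reasoning → Action → Observation cycle involves, here is a framework-free sketch of the loop. It assumes a made-up text convention (`Action: tool[input]` and `Final Answer: ...`), a scripted stand-in for the model, and a toy `add` tool. None of these names come from LangChain or any other library.

```python
import re
from typing import Callable, Dict

def react_loop(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate model reasoning and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)  # Thought, plus an Action or a Final Answer
        transcript += reply + "\n"
        final = re.search(r"Final Answer:\s*(.+)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", reply)
        if action is None:
            transcript += "Observation: no valid action found.\n"
            continue
        name, argument = action.groups()
        tool = tools.get(name)
        observation = tool(argument) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"  # fed back to the model
    return "No answer within the step limit."

def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: first requests a tool, then answers."""
    if "Observation:" not in transcript:
        return (
            "Thought: 15% of 200 is 30, so I should add 200, 30 and 25.\n"
            "Action: add[200, 30, 25]"
        )
    return "Thought: The tool returned the total.\nFinal Answer: 255"

def add_tool(argument: str) -> str:
    """Toy tool: sum a comma-separated list of integers."""
    return str(sum(int(part) for part in argument.split(",")))

answer = react_loop(
    "A company had 200 employees, grew 15%, then hired 25 more. How many now?",
    scripted_llm,
    {"add": add_tool},
)
print(answer)
```

The `max_steps` cap is a simple guard against an agent that never reaches a final answer.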

4.2. Complex Data Analysis and Decision Support

Imagine an LLM tasked with analyzing complex financial reports. With CoT, you can instruct it to:

- Calculate specific metrics (e.g., Year-over-Year revenue growth).

- Identify significant anomalies or top-performing segments.

- Suggest potential reasons or actionable insights based on the analysis.

Without CoT, the model often struggles to perform all these steps accurately in sequence, leading to less reliable insights.
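One way to apply this is to spell the steps out in the prompt and keep an independent calculation on hand to check the model's numbers. The sketch below assumes the standard Year-over-Year formula, (current − previous) / previous × 100. The function names and revenue figures are hypothetical.

```python
def yoy_growth(current: float, previous: float) -> float:
    """Year-over-year growth in percent."""
    if previous == 0:
        raise ValueError("previous-period value must be non-zero")
    return (current - previous) * 100 / previous

ANALYSIS_STEPS = [
    "Calculate specific metrics, such as Year-over-Year revenue growth.",
    "Identify significant anomalies or top-performing segments.",
    "Suggest potential reasons or actionable insights based on the analysis.",
]

def analysis_prompt(report_text: str) -> str:
    """Build a CoT prompt that walks the model through the steps in order."""
    numbered = "\n".join(
        f"{i}. {step}" for i, step in enumerate(ANALYSIS_STEPS, start=1)
    )
    return (
        "Analyze the financial report below. Work through these steps in "
        "order, showing your reasoning for each:\n"
        f"{numbered}\n\nReport:\n{report_text}"
    )

# Hypothetical figures, used only to cross-check a model's arithmetic.
growth = yoy_growth(current=1_200_000, previous=1_000_000)
print(f"{growth:.1f}%")
print(analysis_prompt("Revenue 2023: 1,000,000. Revenue 2024: 1,200,000."))
```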

4.3. Structured Code Generation and Debugging

For developers, CoT is invaluable. Instead of just asking for a complex script, you can add instructions like:

“First, outline the five core functions this code will require, explaining the purpose of each. Then, provide the complete Python script incorporating these functions.”

This approach tends to produce more structured, logically planned code that is easier to audit, understand, and debug than a raw, unreasoned block of code. Want to go deeper on research workflows? See our complete deep research guide for research engineers.

Conclusion: Master CoT, Master the Future of LLMs

Chain-of-Thought Prompting is not merely an optimization; it’s a fundamental shift in how we interact with and extract true intelligence from Large Language Models. It unlocks the “super-reasoning” capabilities that differentiate a text generator from a sophisticated problem-solver.

By understanding and implementing CoT, you gain the power to:

- Build more accurate and reliable AI applications.

- Enhance the transparency of complex AI decisions.

- Lay the groundwork for truly autonomous AI Agents.

To truly master modern AI, embracing CoT is non-negotiable. Start by appending “Let’s think step by step” to your most complex prompts today, and witness the immediate improvement. Ready to see how this powerful reasoning translates into action? Dive deeper into our AI Agents category, where we explore how CoT powers autonomous decision-making in real-world, multi-step workflows.

Ready to implement CoT in your AI projects? Which specific workflow are you eager to optimize first with step-by-step reasoning?
